A few weeks ago I wrote an article introducing dataflows in Power BI and discussed why you should care about them. There are a few questions that have been spinning around in my head since then, significantly:

- What implication is there to the Power BI Golden Dataset as a result of the new dataflows?

- Should I extract my queries from my Power BI workbooks and create dataflows instead?

I will comeback to question 1 another time. Today I am going to explain how and why you may want to move your queries to dataflows.

Why Would You Want to Move Queries to Dataflows?

Power Query is a user friendly ETL tool (Extract, Transform and Load). Traditionally ETL has been done using more complicated tools (such as SQL Server Integration Services – SSIS) and the resulting data is stored in a data mart or data warehouse for consumption by anyone that needs a standard view of the data. Power BI Desktop can consume tables directly from a data warehouse and simply load the table into Power BI – dead easy. But Power Query is also a powerful ETL tool in its own right, and it can be used to transform and reshape the source data directly inside Power BI Desktop (and then PowerBI.com). This is very useful if :

- You don’t have a data warehouse and/or

- You need some variation of what is in your data warehouse.

- You have other data sources that are not in a data warehouse but are still important to you.

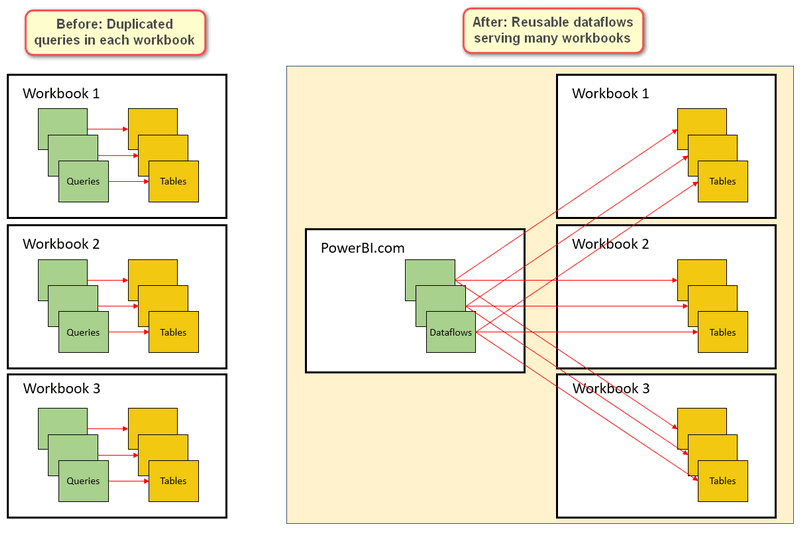

Taking this approach (manipulate in Power Query) is perfectly fine if you have a single workbook, but what if you have 10 similar workbooks all needing the same transformation? Worse still, what if you are one of many people in a company all doing the same thing with multiple workbooks? It is possible that you will end up with hundreds of Power Query queries embedded in workbooks that are either identical or what is possibly worse, maybe even slightly different. One of the things that dataflows allows you to do is remove the Power Query code from the Power BI workbook and make it available for consumption many times into multiple workbooks from the same source. See image below.

How to Move your Queries to Dataflows

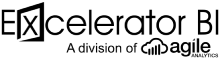

I set out to move my queries from a sample workbooks into dataflows. First I created a new workspace to store my Dataflows (I called it myData). I noticed that when you have an otherwise blank workspace (no workbooks loaded), you will see the welcome screen as shown below. It wasn’t clear to me how to create a dataflow, and it is not intuitive. All you have to do is click “skip” to be able to create a dataflow.

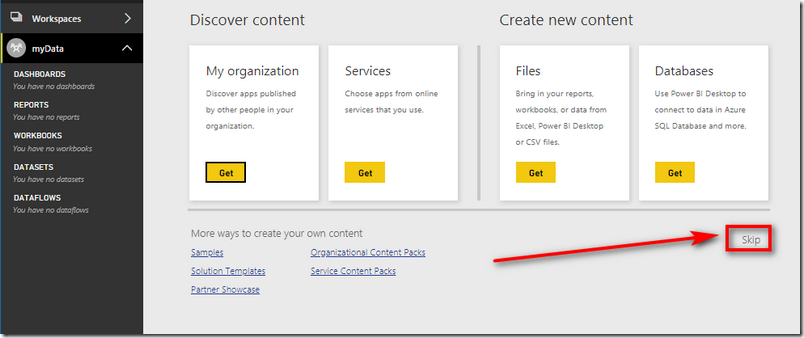

From there I clicked “Dataflows” from the menu at the top of the page and, then the create button.

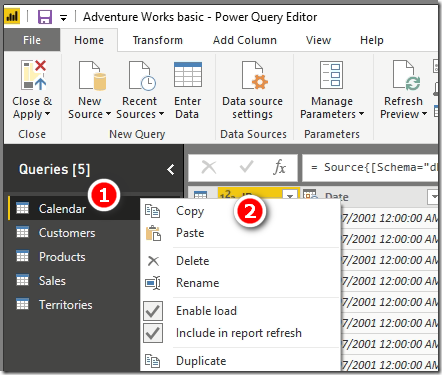

I copied the queries from my current PBIX file one at a time. To do this, I just went into the query editor in Power BI Desktop, right clicked on a query (1 below) and selected copy (2).

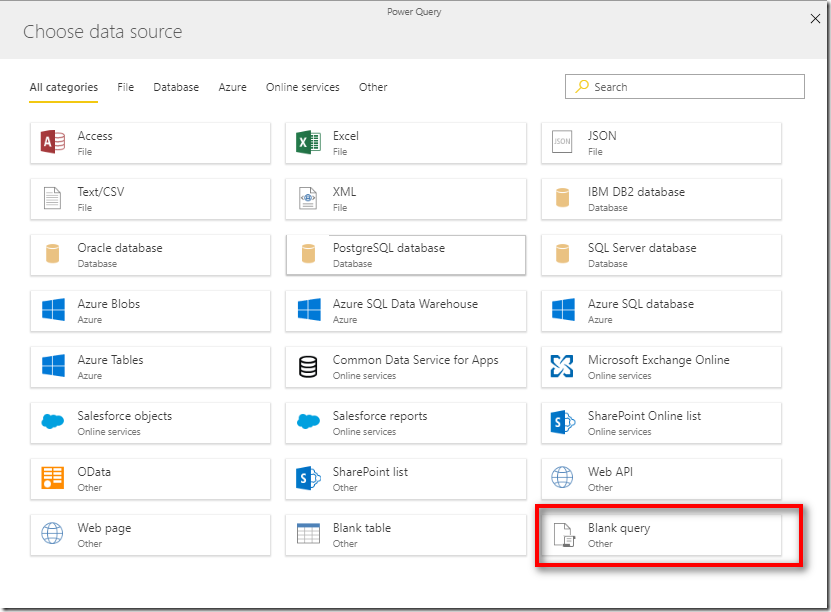

I then went back to PowerBI.com and added a new blank query data source inside dataflows

I then pasted the code from the clipboard directly over the starting power query code in the dataflow as shown below.

In my case I am using a very simple query. Given this case it is probably easier to just create the new queries connecting directly to my SQL database directly inside dataflow rather than using copy and paste. If you had a more complex query, then copy and paste is definitely the right way to go.

When I pasted the code, I was prompted to select a gateway. I already had an enterprise gateway configured to my PC/Server so I selected it from the list. Note: dataflows will not work with a personal gateway. If you have a personal gateway, you may like to consider uninstalling it and installing the enterprise version instead. Don’t be scared, it is not hard at all to do and it is not too different from the Personal Gateway.

I then had to enter my credentials for the PC/Server to make sure the dataflow could access the gateway.

From there it was just a matter of repeating the process for all other tables in my PBIX file. In my case I was just connecting to my SQL Server DB, so it was faster to create a brand new Entity for each of my SQL Tables directly as a new SQL Database Source, so that is what I did.

Create a new SQL connection

To do this, I simply went to new data source, SQL Server.

I then entered the details for the database AND the credentials for the gateway.

Follow the normal Power Query steps to complete the import for the other tables.

New Dataflows Are Ready

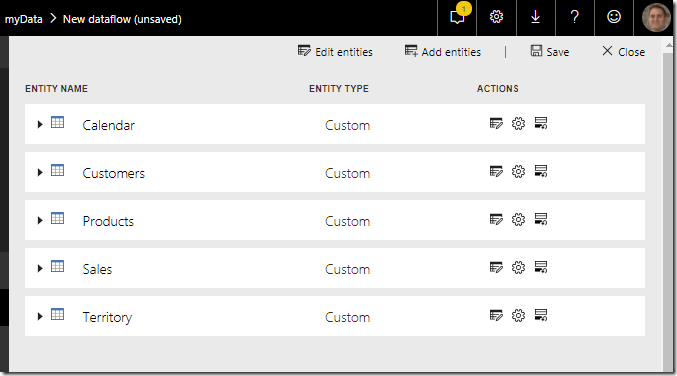

At this point, I had my 5 tables configured as dataflows as shown below. Technically each of these “tables” is called an “entity” inside dataflows. This is a clue that dataflows can do a lot more than just serve up a table to a user (like a data warehouse).

Refresh the Tables

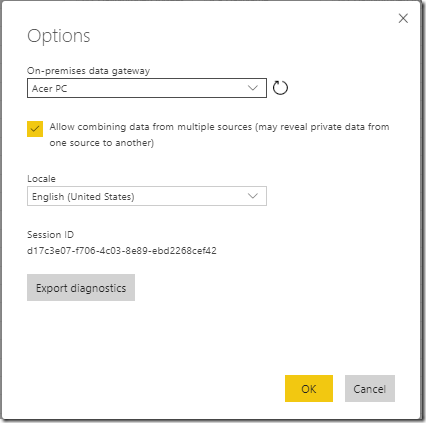

I then saved the dataflow (called it AdventureWorks) and then ran the refresh when prompted. Just a reminder for those of you not in the USA, you will need to change the Locale of your connections so that things such as dates are treated correctly. The default is English US and I need English AUS.

This change only has to be (should be) done once for each data source, but there is currently a bug that is preventing this change from being saved. I know MS is working on this. Given this isn’t currently working correctly, I actually had to change the date columns to data type “text”, otherwise the queries always failed when it found a date like 28/1/2004. Once the data was loaded as text in Power BI Desktop I was able to change the data type back to date again, picking up the correct Locale information from my PC. Hardly ideal, but it is a work around until this bug is fixed.

Re-point the Tables in the PBIX

There are a few ways you can do this, but the easiest way is to get under the hood into the code in Power Query. The process is to:

- Create a new connection to one of the entities in the new dataflow from Power BI desktop (get data\Power BI Dataflows). Repeat for all other tables.

- Then go into Power Query (edit queries) and select the Advanced Editor for the first new query, and copy the M code.

- Then go into the query for the original matching table, go into the Advanced Editor and replace the current code that exists with the new code.

- Repeat the cut and paste process for the other tables

- delete the duplicate queries (the new ones, not the original ones).

The advantages of using this approach include:

- Any calculated columns you have in the table will keep working

- There is no need to move any measures from the tables.

Note: You cannot simply delete the original tables and rename the new tables in Power Query and expect it to work. You must cut and paste the new code into the original table that you want to keep.

Publish the Workbook to PowerBI.com

One REALLY cool feature of dataflows is that I can now publish my PBIX file back to PowerBI.com, but it doesn’t need to be published into the same workspace that contains my dataflows. For this demo, I published my updated version of my workbook to “My Workspace”, not to the myData workspace I created earlier.

Refresh Dataflows and Workbooks

The last thing I did was to set up scheduled refreshes for both the dataflows and the workbooks. Of course I need to set the scheduled refresh for the dataflow to run and complete first, followed by a refresh of the workbook.

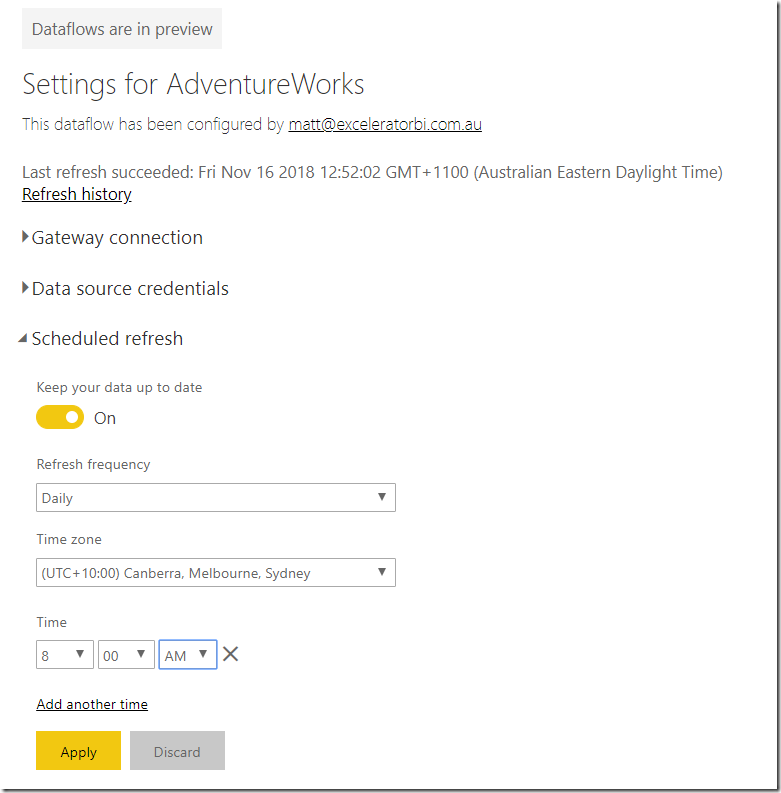

Dataflows Refresh

I switched back to the dataflows workspace and selected schedule refresh.

I set the schedule to refresh each day at 8:00 am

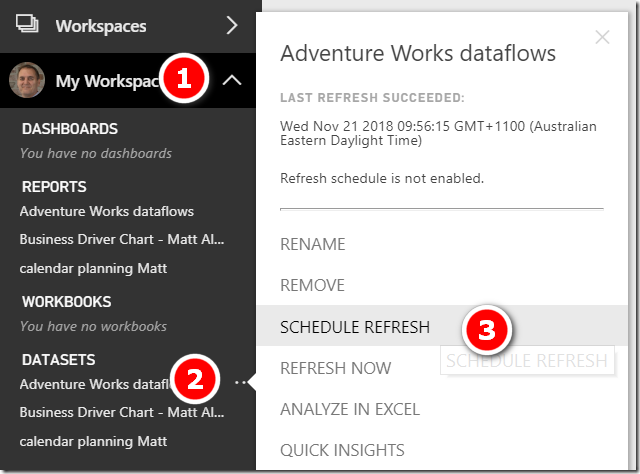

I then switched back to My Workspace and did the same thing with the workbook dataset

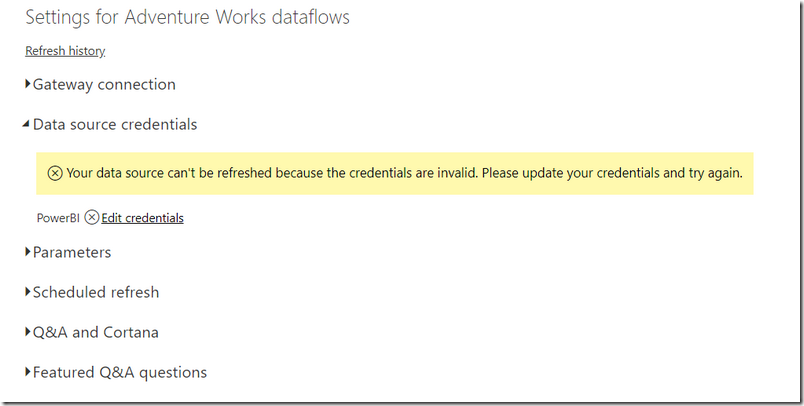

Before moving on, I had to set up my credentials.

I clicked “edit credentials”. The following window popped up. First I was confused about this as I was trying to edit the data in the first 2 text boxes, but that is not what is needed. Just click “sign in” and then log into your account.

After logging in, I was able to schedule the dataset refresh. I set it to 8:30 am giving the dataflow enough time to refresh first.

Benefits

The benefits of doing it this way include.

- You have 1 master query for each table.

- You can load these tables into multiple workbooks reusing a common source.

- If you need to edit the query, you edit it in one place and it will flow through to every workbook.

- Other people can use the same queries (as long as they are given access to the workspace containing the dataflows.

- There is one version of the truth.

- Dataflows can use traditional data warehouses as the source, but can also use Excel spreadsheets and CSV files too. In this sense, dataflows can extend the concept of an enterprise data warehouse to a broader set of data including data not managed by IT.

- There are performance benefits against the gateway, as the gateway is only used once per query instead of potentially many times.

What do you think?

I would love to hear what you think about setting up your queries this way. Do you agree it is a good approach? What other benefits do you see?

Hi Matt,

I am trying to replicate the exact situation described in your article. I have a Power BI report which was connected to an Excel file. I created a Power BI dataflow to automate this using SQL query. I copied and pasted the M code for the dataflow into the old data source and everything works fine. I set both the dataflow and dataset to refresh daily. However, the scheduled refresh for the dataset is failing in Power BI service but if I download the pbix and refrsh it from my desktop, it goes through fine. The error I get in service is the “The keys did not match any rows in the table error”. I would appreciate any guidance on this

This is not simple to debug. My starting assumption is there is something wrong with the Gateway config.

Hi Matt,

I need to rename various tables within a dataflow. Some reports/datasets already use these tables. Will I need to reset and then republish all the reports/datasets that use those? Or does the renaming automatically propagate without any further updates?

If there’s a step-by-step article about this specific question please let me know where that is.

Thanks,

Aaron

My assumption is that you will need to do it manually. There is no “push” from dataflows to workbooks that access those data flows to say “hey, I’ve changed my name”. So I think you are stuck. I would suggest.

1. Make all the changes to names in dataflows, recording from and to names

2. open your workbooks and see what is broken.

3. fix what is broken using the list of changes made in 1.

Hi Matt,

Thanks for posting this. Have you had any success copying over queries which reference other queries e.g. with ‘merge queries’ steps? When following the method you outline above I run into an error (Error: On-prem execution not supported for entity) when trying to refresh queries, although the queries load fine within the dataflow. I don’t have this problem when copying over simple queries that don’t reference other tables or when I recreate queries using the reference method (https://docs.microsoft.com/en-us/power-bi/transform-model/service-dataflows-computed-entities-premium). I have this problem with SQL data and a similar problem with OData.

I wonder if you have experienced this yourself? Many thanks

Dataflows is free to use, as long as you don’t reference other queries. Referencing other queries is a premium feature (unfortunately)

I have decided to copy/paste some Mcoded script from Desktop to Dataflow, BUT i have found a BIG ISSUE. Dataflow does not allow to have entities as LIST, to use it in another Query TABLE or ENTITY

It seems this is called COMPUTED ENTITIES, and it requires to have Premium capacity. Its wayyy tooo expensive for a SME company. Sooo, i will have to still use Desktop

Its a shamee!!

Yes, I agree 100%. Read my rant about that here https://exceleratorbi.com.au/power-bi-licences-changes-good-bad/

Hi Matt,

How do we move Helper Queries (Transform Folders) from Desktop to DataFlow

mmmmm, it’s tricky. The helper Functions are somehow tied to the helper queries. If you move them, I think this relationship will break. I don’t know if there is a solution that will maintain the link

Absolutely brilliant , thanks Matt, Just a quick question though , I am not able to select “View” as Dataflow dataset , After connecting to redshift i can see the views in the list however not able to select , is that a setting issue?

Sorry, I have never connected to RedShift, so I don’t know anything about it

Hi

Thanks for sharing this insight. Please it is possible to create a parameter that will switch between data flows accounts in power BI ? cheers

Good question. Sorry, I haven’t looked at this. But you could test it and report back.

Thanks so much for this Matt, a great time saver!

Thanks for a great post Matt!

Although I am pretty new to PBI and can’t comment yet about the advantages of setting queries up this way, I CAN tell you that your detailed post saved me because that’s exactly what I was trying to do for a week now without success – move queries from PBI desktop to dataflow and then connecting an existing report to it.

Thanks to your post I finally managed to complete this task successfully.

THANK YOU!

Great. I’m glad it helped

Thanks Matt,

I’d like to know more about the “behind the scenes” storage (Azure Date Lake) capacity as a Pro user. When I look at “storage details” on my PBI service account it only shows “dataset” sizes not dataflow sizes. So I’m not sure how my allotment of 10 GB is actually being consumed.

I’ve just tried to move a query from Excel to PowerBI. The query uses a sql statement and then joins to an excel table, plus a few other transformations. Setting up as a dataflow was easy and great. What I then discovered though is that you have to have a Premium membership to enable scheduled refresh. Of course I’m sure I’ll learn plenty by changing my dataflow to bring the raw tables I need through and then working the relationships in PowerBI/Dax 🙂 But as a SQL head I do like to start and think there, so just noting the trap for any others that follow along.

Guess you still have the ‘golden dataset’ approach as well. Create the SQL connection in Power BI desktop, then publish to PowerBI.com?

This is a very helpful topic.

I have invested a great deal of effort to produce some datasets that need to be exported to CSVs. This idea would be helpful, however, we are using PBIRS rather than the service. Part of the reason we don’t use the service is that the data is highly sensitive. I want to give the user the ability to pull the data themselves or push it to them on a schedule without using Power BI but my options are limited without the ability to use the service.

I’ve tried moving the M code to Power Query but Excel runs into memory issues.

What I’d really like is to have an engine that can produce the output for me on a scheduled basis. Does SSIS have the ability to use M? Anything in VisualStudio?

My understanding is the SSIS 2019 has Power Query as a datasource

Matt,

Outside of being able to go across different workspaces, are there benefits to using data flows as opposed to publishing one data model and having all other reports connected straight the power bi dataset? This keeps a “source of truth” in the same fashion. Does it have performance or memory load benefits that would make it worth switching to?

It depends. One benefit of dataflows is that you can set each dataflow to refresh to its own schedule. So you could have Sales refresh every day but set Customer to refresh say once per week. You can’t do this with a golden dataset – they all have to be refreshed on the same schedule. Another option is re-usability of entities (tables) within different models. If you only have 1 model, then this would not be useful, but if you have many models, you will need to replicate the Calendar (for example) multiple times in each model.

Great explanation. I created a couple queries in data flows and tried to append the queries as a new query, it would not allow me to do this and returned a message to say I needed power bi premium. Have you come across this?

No I haven’t seen it and agree it is a bit strange. It is still in preview so my guess is that this is an unintended feature, but I can’t be sure. Maybe someone else here knows?

I asked my mate Gilbert from Fourmoo.com

This is what he said:

That would be due to having the Appended Queries appearing to be computed entities which is a premium feature. In order to Append them together I think if the person had to disable the loading of each of the tables that are going to be appended and then create “Append as New” and have that final table load, it should not require Premium (Computed Entities)

Thanks for taking the time to ask around and posting a reply. Great help.

Hi Matt,

PBI Dataflows s in “preview” now…do you have any idea when it may be generally available?

These (dataflows) tables are being stored in “Azure Data Lakes Store”…I don’t know what that is about and how it works. Any idea?

Sorry, I have no idea how long it will be in preview. Other preview items seem to stay in preview from 3-12 months. Also, preview doesn’t mean things won’t improve before GA. Some things will improve until finally it is in GA. As for the data lake question, see my point 4 in my original article. https://exceleratorbi.com.au/power-bi-dataflows-and-why-you-should-care/

So no, I don’t really know a lot about it. It is a big data storage technology that allows storage of files and unstructured data for rapid processing and retrieval using big data principles. I don’t know more than that

I can certainly see benefits of moving queries to Dataflows. Let’s hope MS provide a more efficient way to do this sometime soon. It is rather painful moving them by copying and pasting one at a time when you have lots of queries.

Yes, I understand and agree. I am sure it will come 🙂

Great post Matt!

What about performance benefits?

Have you test it? Can I expect M Query to be refreshed faster in comparison to the same one in PB Desktop?

I haven’t tested the performance benefits. My guess is that the sync from the dataflow through the gateway will be the same. Then there is another sync from the workspace to the dataflow. This should be super fast. If there are multiple workspaces and workbooks on PowerBI.com, then the gateway is only used once instead of multiple times, so yes you are right – this would be a great benefit.

Thanks! This is interesting. I would definitely try this.

Thanks Matt. Dataflows are really amazing and the lucky ones who are backed by Premium can do much more with them.

Matt, how do you add a data source in a dataflow? It’s not obvious to me how to do that in PowerBI.com

Mim. Yes, there is no RLS on csv as you know. But once the data is loaded into Power BI you have the same RLS capabilities as any other import mode model. Just don’t give the end users access to the dataflows, just give them access to the report.

Ahh, sarcasm!

As a Pro User you have precious 10 GB. Storing both, dataflows and dataset, you are using that space twice.

Hey Frank. What do you mean by “double storage size”?

Great article as usual, concise and easy to follow. Looking forward to the next one regarding golden dataset. With the PBI roadmap looks like dataflows can accomplish this (when it gets close to parity with the full PQ desktop capabilities, and directquery with dataflow), and also PBI cloud dataset in the future (when multiple datasets can be used in same model/report. Exciting times for BI!

Best way to double storage size, while getting rid of query folding.

Good to highlight this as an Architectural pattern – to move the transformation one step closer to the Data source.

Matt

My comment is not at the practical level, but when trying to look at the “Bigger Picture”: Power Query Started as an amazingly easy ETL tool in Excel and is growing in to anther ” MS Monster”

Now I don’t Say I know how to do it better, but I have a bad feeling it could have gone on developing it a better path and that once again (like so many times in a past) Microsoft will loos the mass users, and stay with IT Pro.

Would love to hear what s our opinion

Uriel. My view: There is a big difference between building software that is user friendly for business users and then extending it for more technical purposes too, and building technical software for IT and then trying to make it easy for business users to use. As long as the easy of use is not removed, I am not that fussed if it is extended for IT purposes. Actually I think it is a good thing if Business and IT use the same tools. I learn DAX in Power Pivot for Excel, then I did a 6 month SSAS Tabular project (same core engine). I was fully productive on day 1 and didn’t have to relearn anything. My blog post 2 weeks ago was the first time I had ever used dataflows, and I didn’t need any new training. JMO

Matt

let’s say you are pulling data from SQL server and there is some security implemented, manager store A can’t see the data from Store B, now you export all data using dataflow, now all the data is available for both stores, and because it is CSV file, you can’t implement any restriction, if a user has access to those entities, he will see everything, just worth noting that’s all.

It is great that dataflows can extend the traditional datawarehouses with the speed and scalability of cloud. It would be more better if it is possible to connect with it in Direct Query Mode. The other features that could be included visual flow of the transformation like Azure Data Factory ,able to access the backend Azure Data Lake files, converting csv files to Parquet files and source control integration really create perfect and complete experience with Power BI Platform.

Great Matt,

I will apply this especially for new customers. Looking forward to your next (?) blog discussing the Golden Dataset and Dataflows. Feels straightforward but their is probably a catch 😉

Sorry, I am not sure what you mean.

yes with the caveat that, it is just csv files, so there is no way to implement any kind of security ?

Thanks Matt. Great trailblazing! Helpful for all of us.

Thanks Matt! Important scenario for me. Will give it a go once IT dep enables dataflows in our tenant.

As usual, great content, Matt!