This is a further (fourth) update/edit to this article Power BI Golden Dataset I first wrote in Apr-17. I then updated it again in Jan-18, Jun-18 and again now in May-20 (Jason did a full review this time around). When I update my articles, I change the original post with the latest and best information available at the time – that way only the current/latest version is available online preventing people stumbling on old advice.

The original idea of a Golden Dataset was to

- Load a PBIX into a OneDrive or SharePoint folder

- Import the Golden Dataset into one or more workspaces (each workspace will have an identical duplicate copy of the dataset).

- Rely on the capabilities of PowerBI.com to automatically refresh the dataset in all workspaces everytime the source (step 1 above) is changed.

This is no longer the most effective way of achieving a Golden Dataset. This article explains the more modern approach, which is to use shared datasets instead. Further, the concept of the Golden Dataset now has very wide reaching impacts across concepts that include shared datasets, shared workspaces, certified datasets and lineage views (to name a few). This article has been broadened to cover all of these areas. This is a very long article now, so strap yourself in. Here is an overview of what is covered.

- Statement of the problem to solve.

- Solution overview and setup

- The definition of a golden dataset.

- Publishing a golden dataset options.

- Using datasets across workspaces configuration.

- The golden workspace.

- The golden dataset.

- Maintaining the golden dataset.

- Creating thin Power BI reports, including local measures.

- Publishing thin workbooks.

- Impact analysis and lineage views.

- Creating online only reports.

In the future, this will also include deployment pipelines (Microsoft recently announced this feature, however it is only available for premium users, and thus this information is still valid for those not in a premium space).

The Problem – Too Many Data Models

Power BI has so many great features it seems almost sacrilegious to raise problems that can surface when unleashing this fabulous self service BI tool to the user base. Having said that, one of the problems that can occur in the world of self service BI is the proliferation of slightly different versions of essentially the same data model. The problem is made worse by the fact that you can publish the same data model to multiple workspaces at PowerBI.com, essentially multiplying the problem. Note: data model and dataset are essentially the same thing – I refer to data model when using Power BI Desktop, but when it is published to PowerBI.com it is officially called a dataset.

If you think about it, self service BI (using your own data models) has the potential to be the antithesis of enterprise BI. Instead of having one single data model that is used for all reporting (enterprise BI), you can build individual bespoke data models each time you have a new problem. This is both powerful and problematic. If you are not careful, before too long you can have an unwieldy mess of uncontrollable and unmanageable data models that are essentially the same at the core but with different reports sitting over the top. The concept of a “Golden Dataset” (you heard the term here first in April 2017) is a elegant solution to this problem, allowing use of a single master source (dataset) across multiple reports in Power BI Desktop (and Power BI Service). Let me explain further below.

Added note: Microsoft has already announced the introduction of composite models (the ability for a user to create a new PBIX file that connects to a golden dataset and then allow the user to extend the model further in their own PBIX file (eg by adding local tables) – this will literally be another game changer, and will finally mean that self service BI and enterprise BI can both live closely together in a world approaching utopia.

Solution Overview and Setup

What is the Golden Dataset?

The Golden Dataset is a master Power BI Data Model that is loaded to PowerBI.com with or without a report attached. (Actually currently you must have at least a single page in your report, even if it is blank, but you can delete it once loaded to PowerBI.com if you like). Once you have your Golden Dataset loaded to PowerBI.com, you can build as many new ‘thin’ reports as you need in Power BI Desktop by connecting Power BI Desktop directly to PowerBI.com with Get Data -> Power BI Service.

The Golden Dataset is a master Power BI Data Model that is loaded to PowerBI.com with or without a report attached. (Actually currently you must have at least a single page in your report, even if it is blank, but you can delete it once loaded to PowerBI.com if you like). Once you have your Golden Dataset loaded to PowerBI.com, you can build as many new ‘thin’ reports as you need in Power BI Desktop by connecting Power BI Desktop directly to PowerBI.com with Get Data -> Power BI Service.

Note:

A Thin Workbook is one that doesn’t contain a data model itself, but links into a central data model (in this case stored on PowerBI.com).

The advantages of taking this approach are:

- You only have one dataset/data model to maintain. This will use less space, use less resources to keep the data up to date, and also eliminate the proliferation of slightly different versions of the data model.

- You can maintain and improve the single data model in the Golden Dataset master PBIX and make the changes available to all users and dependent reports.

- You (and everyone else with access rights) can simply build new ‘thin’ reports connected to the Golden Dataset as needed.

- Anyone building a new report in Desktop using the Golden Dataset will be able to add their own “local” measures – local to their thin workbook.

- With the implementation of a suitable business process, the best local measures created by users can be retrospectively migrated into the Golden Dataset for the benefit of all.

- You get the benefits of an enterprise solution (kinda like using an SSAS Tabular data model) without having to invest in all that infrastructure.

Let’s explore how it works.

Publishing Your Golden Dataset Options

There are actually a few ways you can work with Golden Datasets in Power BI. The first two options below have been discussed previously the last time this article was updated. Option 3, using a Shared Workspace will be discussed in detail below.

Your options are:

- Save your Golden Dataset to OneDrive for Business and import to one or more Workspaces from there.

- Save your Golden Dataset to SharePoint Online and import to one or more Workspaces from there.

- Publish your Golden Dataset to a Power BI Workspace (Golden Workspace), create a new thin workbook pointing to the Golden Dataset, and then publish the thin workbook to a different workspace(s). Power BI manages the link back to the Golden Dataset for you automatically.

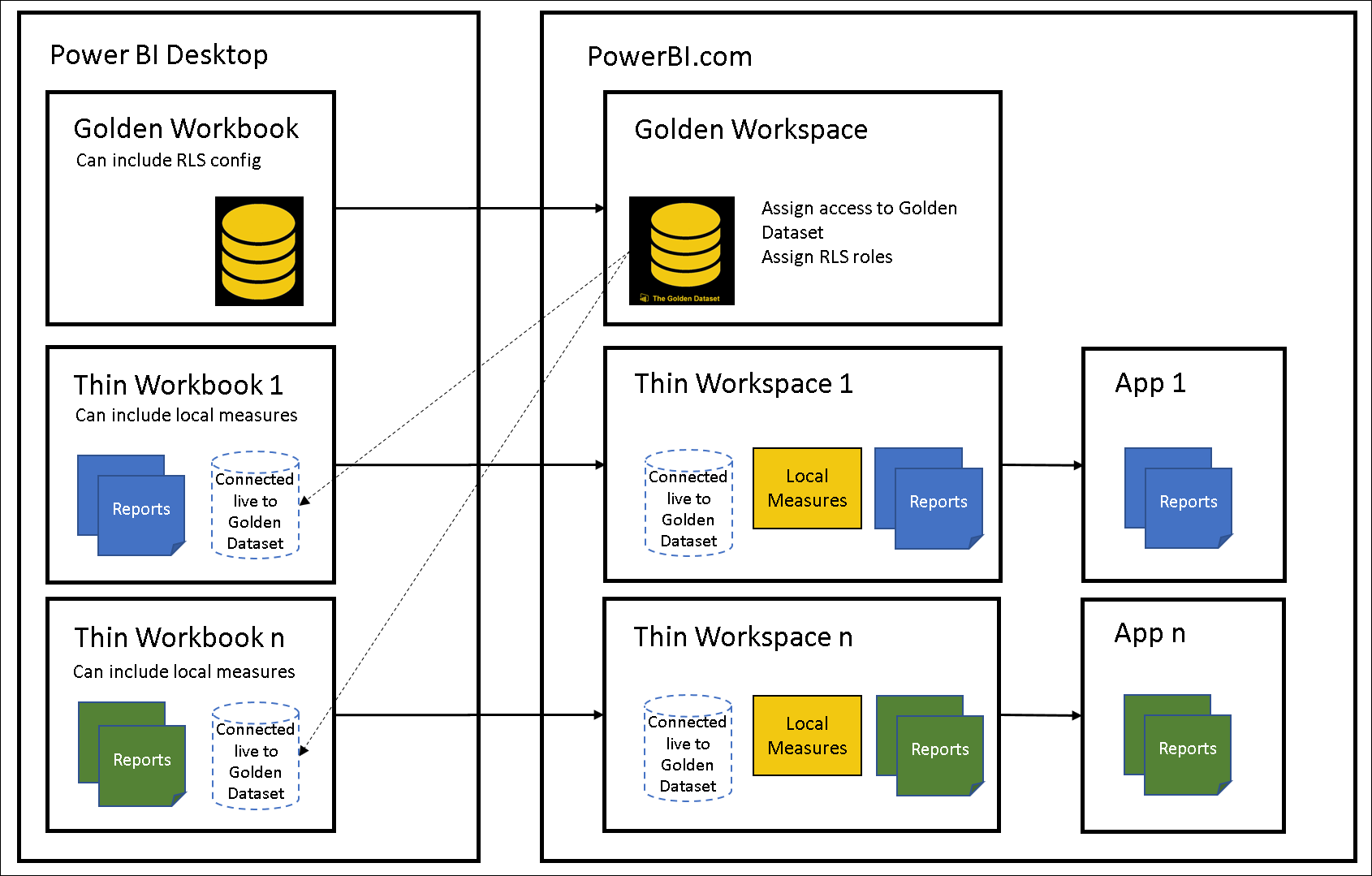

Options 1 and 2 above effectively provide the ability to impact multiple workspaces and reports while maintaining only one dataset, however in order to use the dataset across multiple workspaces, the dataset needs to be loaded multiple times into each workspace where you need the data. This also means that each copy of the dataset needs to have its own refresh schedule configured adding duplicate refresh effort to the systems. The advantage of using option 3 is that you can have multiple workspaces and reports/apps linking back to one common source of data, without the need to duplicate to models. This goes a long way to addressing the restriction that currently exists where only one app can be produced per workspace. The concept of working with a Golden Dataset published into a Golden Workspace is illustrated in the image below.

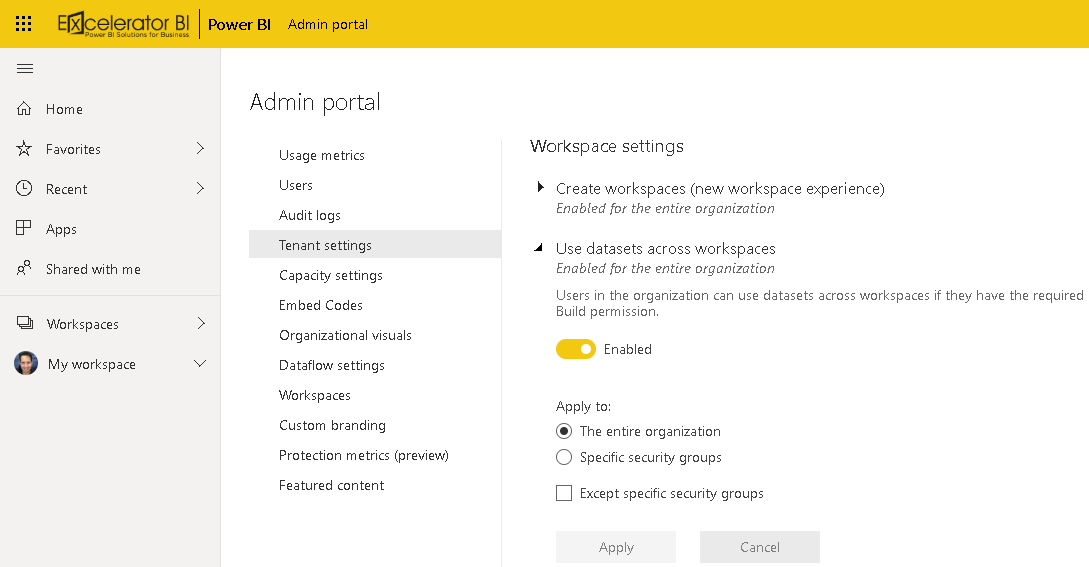

Using Datasets Across Workspaces Configuration

In order to be able to use a common dataset across multiple workspaces, your service tenant needs to be configured to allow it. In the Admin Portal, select Tenant Settings, and scroll down to Workspace Settings. Here you will find an option to Use datasets across workspaces. This can be enabled for either the entire organisation, or specific security groups.

It is also recommended that if you are intending to work with Golden Datasets, you should have a system in place for certifying the datasets so that your report builders know that this is an official data source. A certified dataset is simply a label that confirms this dataset is the real deal. This feature can also be configured in the Admin Portal, Tenant Settings. Now that your workspaces are configured to allow datasets to be used across workspaces, continue to scroll down until you get to Certification. Click to expand, allowing you to enable this setting. Once enabled you have two fields requiring attention. The first field asks you to specify URL for documentation page. This is where you link your dataset certification process protocol, so when a report developer clicks the “Learn More” link in the Endorsement settings of their dataset, this documentation will guide them in the process for requesting certification.

The second field requiring attention is the “Apply to:” field. Here you will allocate the appropriate security group(s) responsible for dataset certification (In this example, I am in the Golden Data Admin group). This field can be applied to the entire organisation, but that seems counter productive considering the purpose of certifying a dataset is to ensure report developers they are working with a controlled and approved dataset. You should therefore only give access to a sub set of users who are allowed to certify datasets.

Now that we have an appropriately configured tenant, we can proceed with setting up the Golden Workspace.

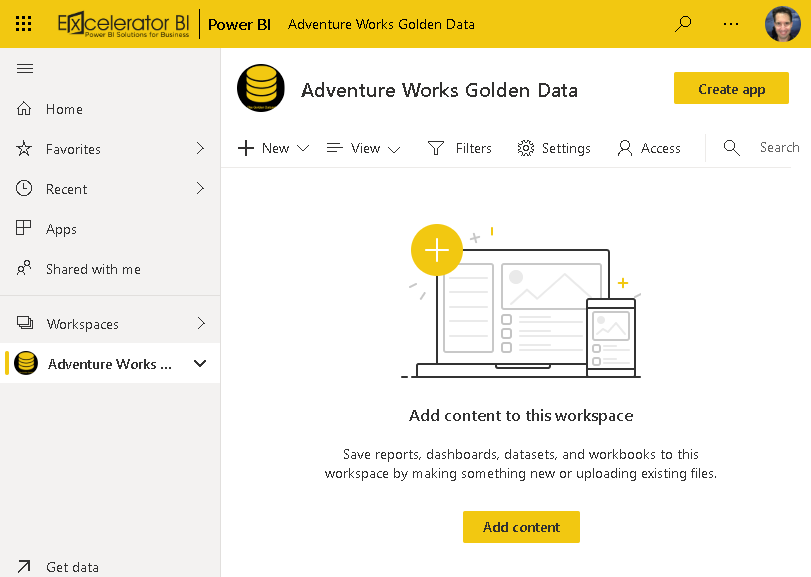

The Golden Workspace

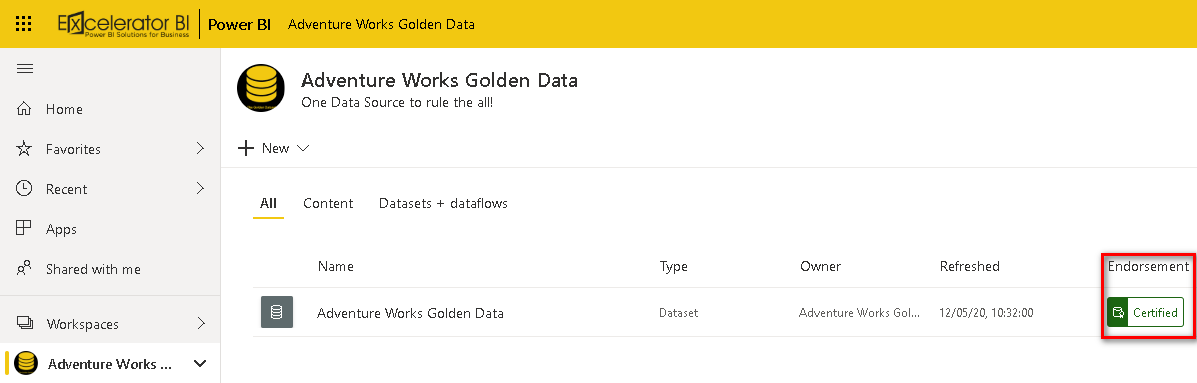

With an appropriately configured tenant, the first step to establishing your Golden Dataset is to create a Golden Workspace for it to reside in. For demo purposes, I am going to create an Adventure Works Golden Data workspace in my PowerBI.com account. This is no different to creating an ordinary workspace as far as creation is concerned, but I would recommend you establish appropriate protocols so that only “Golden Data Admin” security group users create and maintain these workspaces.

In this example, I am using a Golden Dataset logo to visually illustrate that this is a Golden Workspace, with an appropriate workspace name and description. We are now ready to publish our Golden Dataset.

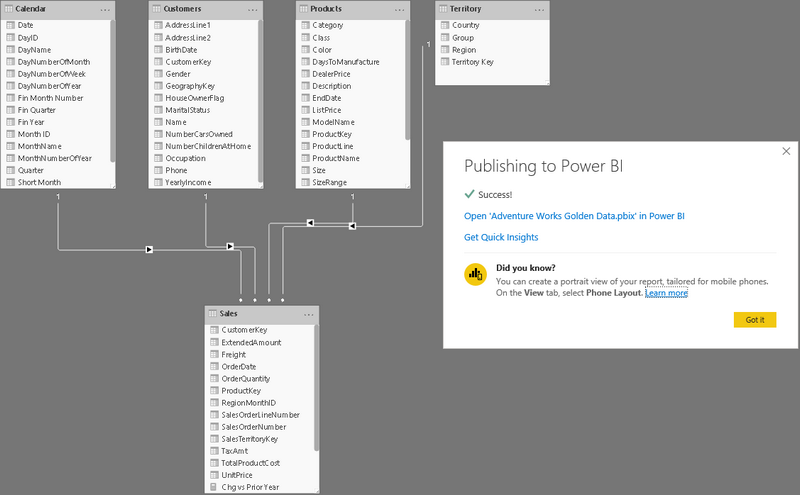

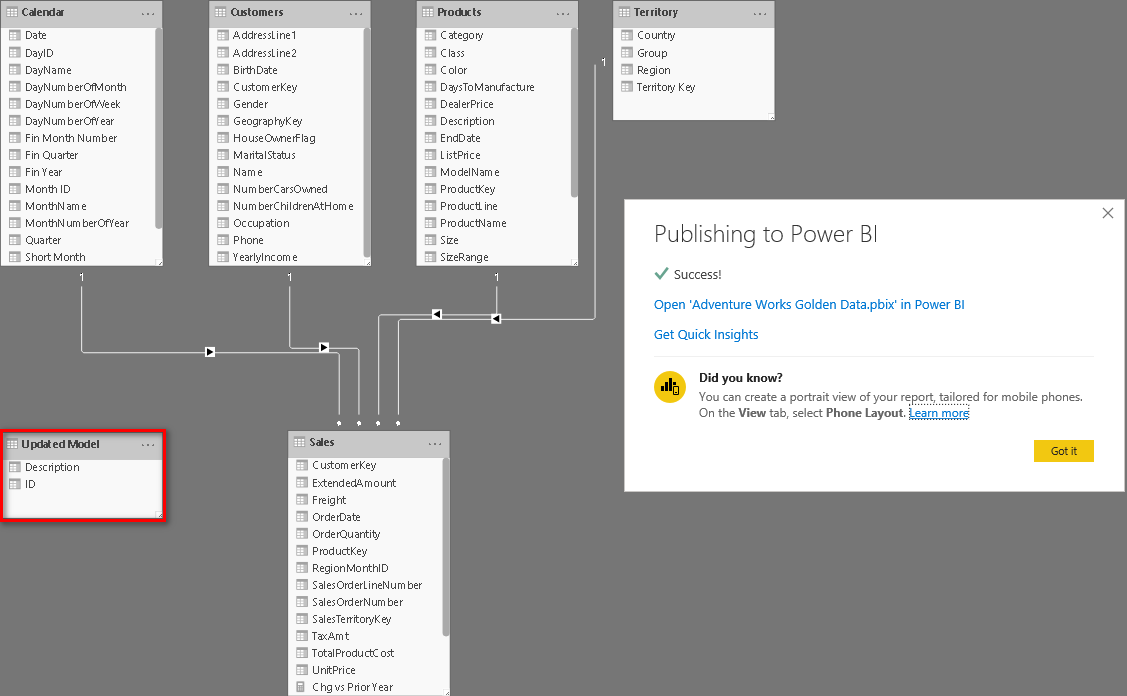

The Golden Dataset

In Power BI Desktop, I have built the master data model for my colleague report developers to use in building their reports. This PBIX file only contains my model (including necessary calculated columns, tables and measures). The report page is blank. I can simply publish this workbook into my new “Adventure Works Golden Data” workspace as I normally would.

Now that I have my Golden Datset in my Golden Workspace, I need to make a few minor adjustments before it is ready for production use. First, I need to consider whether or not to remove the blank report from this workspace (#1 below), as I only want to maintain my Golden Dataset(s) in here (more on this later). Then I need to promote and certify the dataset (#2 below) as being ready for production (I will release an article on certifying a dataset soon, for those of you who are unsure how to do this).

The Golden Dataset is now ready for production.

Making Changes to The Golden Dataset

The real benefits of this approach are realised when you need to make changes to the Golden Dataset. To demonstrate how this works, when I first published the dataset to the Golden Workspace it had 5 tables. You can see there is now an additional table in the model being published to replace the existing dataset in the Golden Workspace.

At this point it is important that I draw your attention to the fact that if you update a Golden Dataset in a Golden Workspace, it does not reset the certification level of the dataset. Depending on the data policies in your organisation this may or may not cause complications. If you do not have the authority to re-certify the updated dataset, you should have protocols in place to get the updated model certified before your publish it to the Golden Workspace and overwrite the approved dataset.

Note: The re-certification process is likely to be addressed in the future once deployment pipelines are made available.

With your updated dataset loaded into your Golden Workspace, you will need to delete the blank report again. This is of course optional, providing everyone who uses the Golden Workspace is aware that it is not intended for building reports in. Another option to consider, is to load a single image into the report page that illustrates the model design (this will make more sense below).

Creating a Thin Power BI Desktop Report

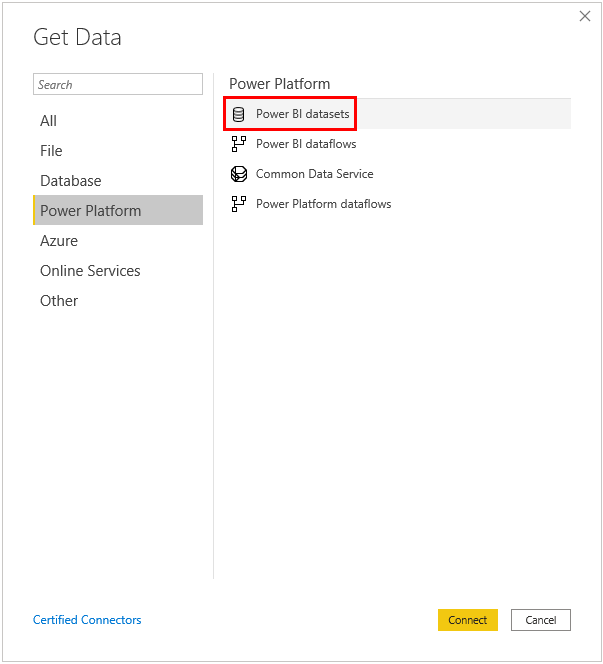

OK. We now have a Golden Dataset in our Golden Workspace, its time to create a new thin report using Power BI Desktop. To do this, simply open a new blank PBIX file in Power BI Desktop, and opt to Get Data when the splash screen welcomes you. Navigate to the Power Platform option and select “Power BI datasets” as the data source (shown below), then click Connect.

If you are not logged in when you do this, you will be prompted to log in before proceeding. Once logged in, you will be presented with a list of datasets that you can access from your various workspaces. This is one reason why having official datasets promoted and certified is recommended, as these will appear at the top of the list, with certified datasets appearing before promoted datasets. Simply select your Golden Dataset and click Create.

After building my report, I saved the Power BI Desktop file with the name “Thin Sales Report”. The new thin workbook does not have a data view or relationship view, only the report view as shown below. Also the thin workbook doesn’t have a copy of the data, so it will only work when you have direct access to PowerBI.com (keep that in mind if you want access when you are out of Internet coverage).

Local Measures

As mentioned earlier, one of the benefits of this approach is that a report builder can connect to the Golden Dataset but still create local measures when needed. Here is my thin Power BI Desktop workbook below (#1 below). I can right click on a table in the Fields List and create a new Measure (#2 below), but notice you can’t create calculated columns or new tables (they are not in the drop down list and are greyed out in the modelling tab), only measures are available (#3 below).

Any new measures you create in a thin workbook are stored as part of that thin workbook and are not visible in the Golden Dataset. If, down the track the Golden Dataset is updated with a measure using the same name as the one you created locally, your local measure will show an error in your thin workbook and the new measure will appear, working in place of your local version.

You will also notice that any ribbon buttons relating to the dataset in your thin workbook are also locked. Among other things, this means you cannot currently add additional data to the model your thin workbook is built on, however there are moves afoot to enable the building of composite models in thin workbooks as shown in the image below.

Below is a screen shot of new composite models coming soon (not available as of this update in April 2020)

.

In this image, we can see a workbook that is connected to 3 different datasets (indicated by the colours, Red, Green and Blue). This is going to be huge! To find out more, watch Christian Wade’s Business Applications Summit presentation on Modern Enterprise BI (The Business Analyst view of the Enterprise Semantic Models begins at ~14mins).

In the meantime, the only advice I can offer in this regard is to add an image of the dataset model into the Page1 report of your Golden Dataset. Then you simply need to connect to PowerBI.com and look at the report attached to the Golden Dataset to understand the underlying data model.

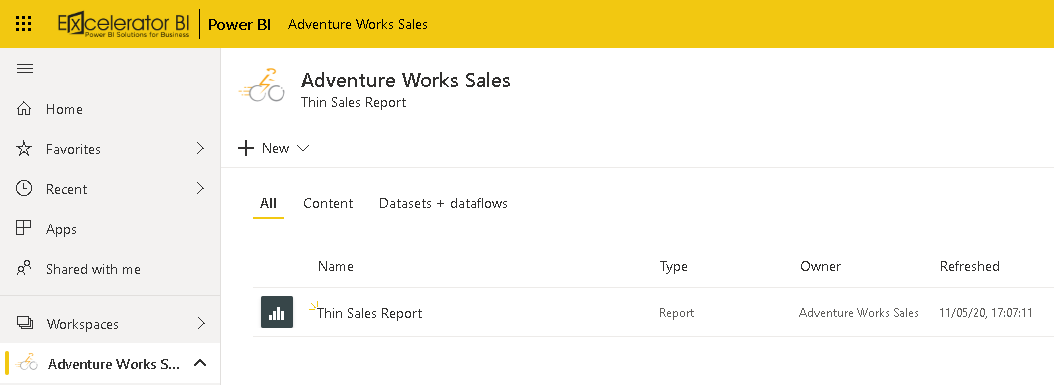

Publishing the Thin Workbook and Workspace Lineage

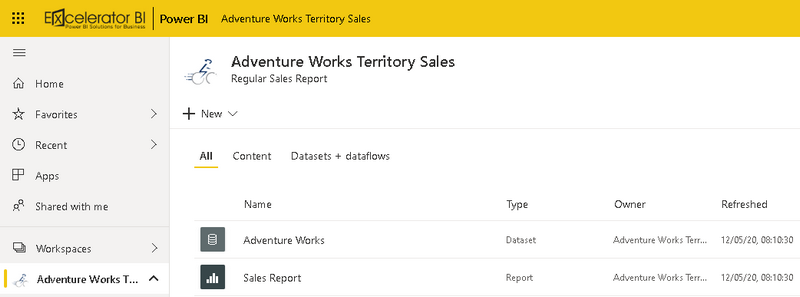

Once you’re ready to publish your thin workbook to PowerBI.com you will normally publish this to another workspace (it can be the same one, but normally it will be different). In this case, I have published my thin workbook into a new workspace called “Adventure Works Sales”. I have also published a regular workbook into a workspace called “Adventure Works Territory Sales” so that we can compare the difference between the two.

Note: I now have both a desktop PBIX file AND a new PowerBI.com thin workbook each containing a report pointing to the same Golden Dataset in the Golden Workspace.

After publishing I can see the new Thin Sales Report (Adventure Works Sales) and Sales Report (Adventure Works Territory Sales) in their respective workspaces.

This is the Thin Workbook

This is the Regular (Golden) Workbook

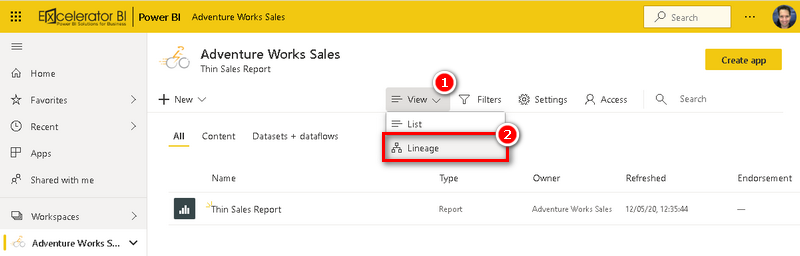

In comparison between the two, there is an obvious absence of a dataset in the Adventure Works Sales workspace, and no I didn’t just photoshop it away. To understand this, let’s explore the new Lineage View offered in the new experience workspaces.

To view your workspace contents in Lineage View, simply expand the View tab (1) and select Lineage (2).

Let’s first look at the lineage of a standard workspace.

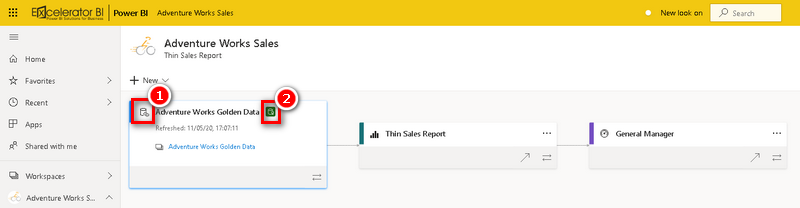

In the Adventure Works Territory Sales workspace, the lineage view reveals that the Sales Report (page 1 with the image of the data model) is in the workbook containing the Adventure Works dataset (golden) which is connected to the 5 Tables in Excel data source via a Gateway.

In contrast, the lineage of the Thin Adventure Works Sales workspace containing the thin report, reveals a General Manager dashboard that stems from the Thin Sales Report, which is connected to the Adventure Works Golden Data in another workspace. Looking at the image below we can see two significant flags relating to the Adventure Works Golden Data. The icon for this dataset contains a link in the bottom right hand corner (1), letting you know this is a shared dataset. The green icon to the right of the dataset name reveals that this is a certified dataset (2).

To explore the source of your Golden Dataset, simply click on the dataset name or on the hyperlink provided (see above). This will redirect you to the lineage view of the shared Golden Workspace.

The lineage of the Adventure Works Golden Data workspace reveals that there is an Adventure Works Golden Data report (containing only our data model image mentioned earlier) stemming from the Adventure Works Golden Data dataset, which is connected to the 5 Tables in Excel data source via a Gateway. In this view there is also a small icon immediately to the left of the “Show Lineage” icon that reveals the dataset’s impact across workspaces (1).

Understanding Impact Analysis

Note: Before proceeding in this next section, I created a second workspace and uploaded another thin workbook connected to the Golden Dataset, so that I could illustrate the Golden Dataset’s impact across multiple workspaces more effectively.

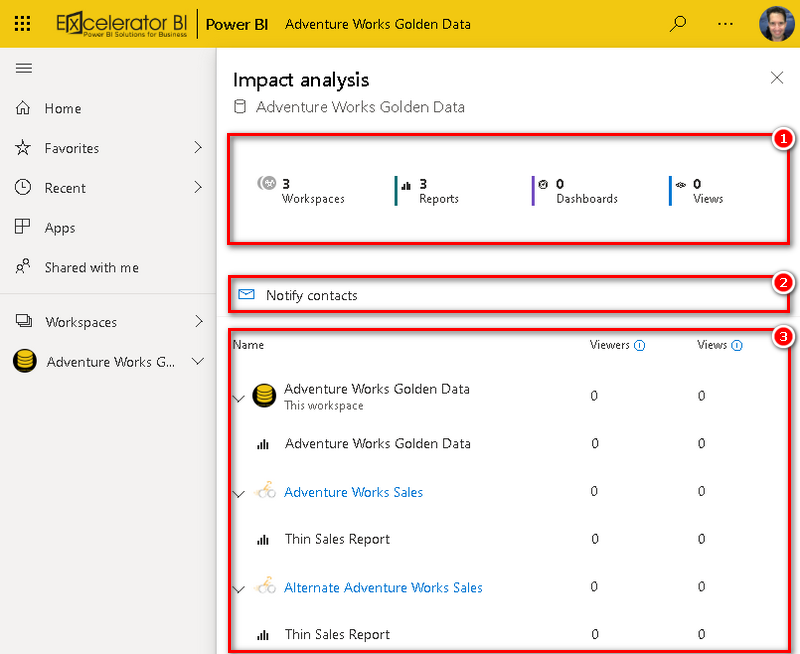

To open the impact analysis report, I clicked on the link (shown as 1 in the image above). The Impact Analysis report is an important feature to allow you see what will be effected when you make changes to your Golden Dataset.

Exploring the Impact Analysis pop-out shows you how many workspaces, reports, and dashboards will be affected by your change, and provides easy navigation to those workspaces where the affected reports and dashboards are located so that you can investigate further if required.

The Impact Summary (1) shows you how many workspaces, reports and dashboards and the number of views there are on those potentially affected items. This helps you determine the overall impact of the change for downstream objects. Thus, it is probably more important to investigate the effect of a change to a dataset that has 500 views than on a dataset that has 10 views.

The Notify Contacts link (2) opens a dialog where you can create and send a message about any dataset changes you intend to make to all the contacts for all impacted workspaces, including workspaces you don’t have access to.

Finally, the Usage Breakdown (3) shows you, for each workspace, the total number of distinct viewers and views for the potentially impacted reports and dashboards it contains, and allows you to further break that down for each report and dashboard.

Power BI Online Only Reports

Of course you can use the Golden Dataset to create a PowerBI.com service only report (as you can with any dataset), however to maintain the effect of the shared Golden Workspace, we need to do this in a slightly different way.

When working in Adventure Works Territory Sales, a regular workspace, we simply need to click on the Dataset in the navigation pane (1) and we can build our new report (2) and save it into the reports area of the workspace. After the report has been saved (top right hand corner of PowerBI.com) you will see the new report in the Reports list in the navigation pane (3).

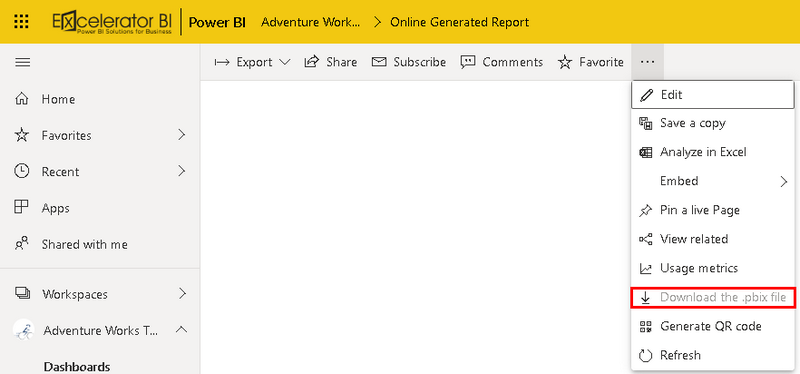

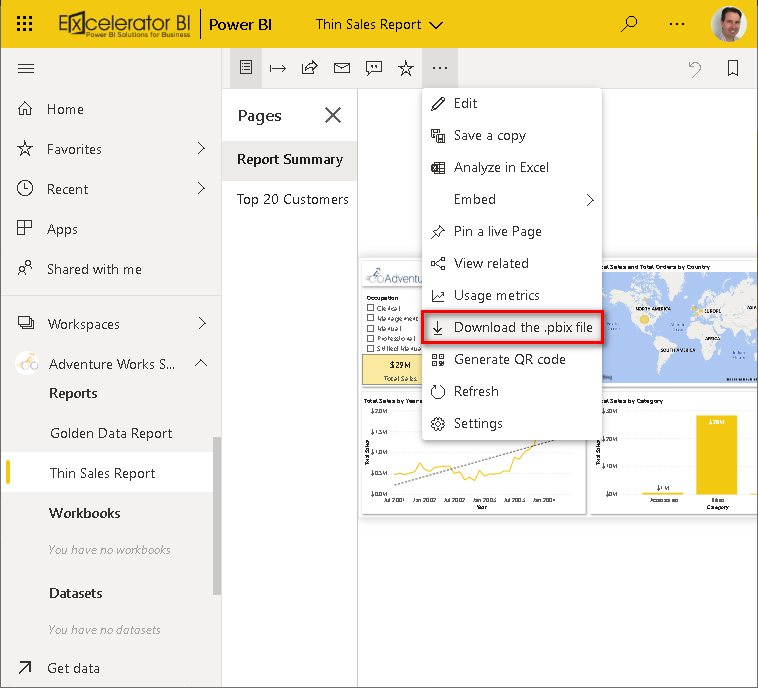

If required, you can download a desktop version of this report by clicking Download the .pbix file as shown below. Which will download both a copy of the report and a copy of the underlying dataset associated with that report. But what about when we’re working in thin workspaces?

To illistrate, I have used our training profiles below.

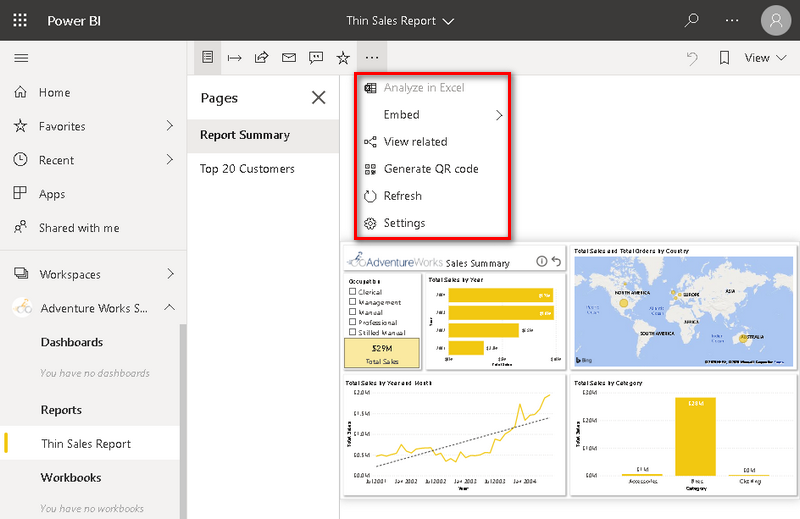

Looking at the Adventure Works Sales thin workspace, we don’t have any datasets available to build reports on, and our collaborators who have appropriate access to build reports in the Adventure Works Sales shared workspace cannot currently make any adjustments to the Thin Sales Report.

The solution to this is to ensure your collaborators are given build access rights on the Golden Dataset.

Even if you wish to keep your collaborators access to the Golden Dataset limited to viewer status, with build enabled, they will be able to edit and modify reports in other workspaces that use the Golden Dataset.

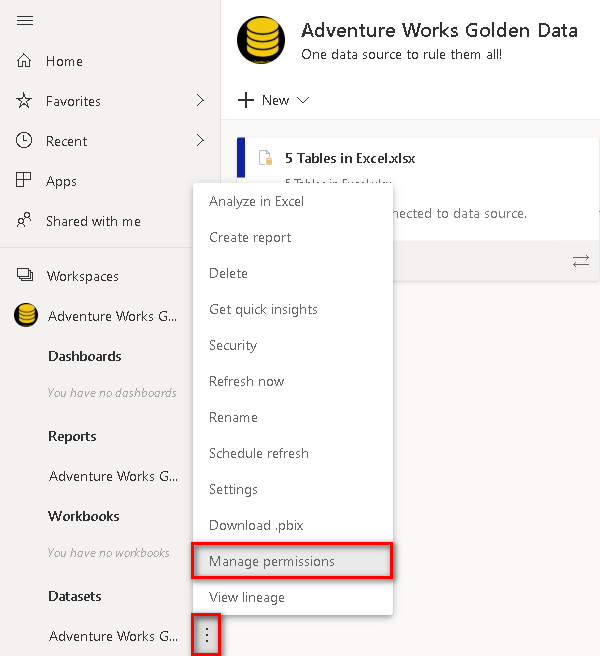

The first step to enable this is for you as the administrator of the Golden Dataset, to click on the elipsis next to the dataset name, and select Manage Permissions.

You can now assign build rights to those users you wish to give editing rights to in the Golden Workspace. Simply click the elipsis next to the user’s permission level, and select Add Build.

Now that I have given my collaborator build access to my Golden Dataset, how will this impact their access in the Golden Dataset Workspace?

As a viewer of the Golden Dataset, the content available to your collaborators doesn’t change in the Golden Workspace. Here, they will still only have access to view that “Page1” report that was published alongside the Golden Dataset.

When my collaborator views the Adventure Works Sales workspace however, they are now able to modify the existing Thin Sales Report or Save a Copy and start building a new online only version.

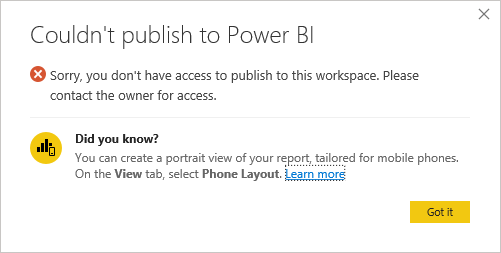

With build authority granted to my collaborator they are also now able to connect to the Golden Dataset in Power BI Desktop, and build a new thin report that they can publish up to the shared Adventure Works Sales shared workspace, but the Golden Workspace is protected from them accidentally publishing a new workbook into it.

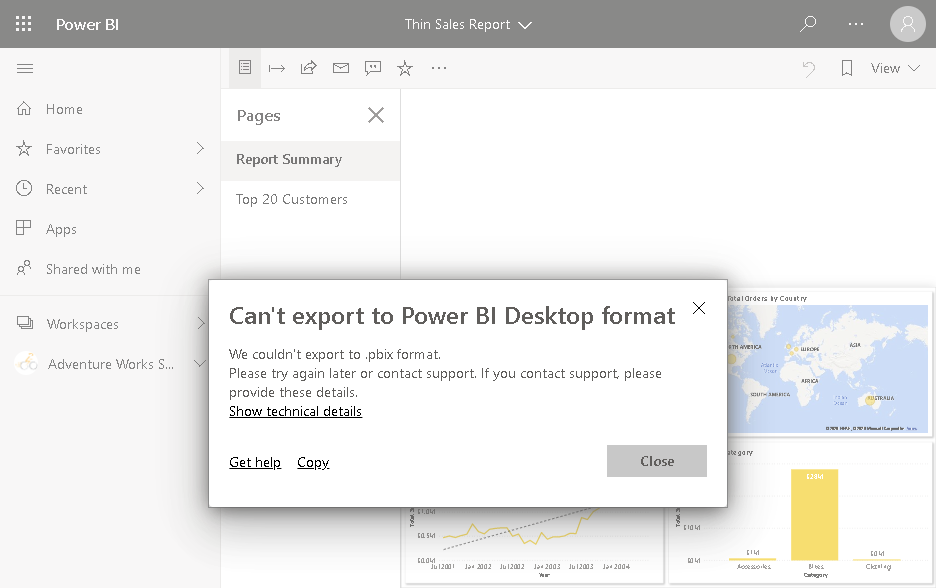

Some of you may have noticed that the Download the .pbix file is once again available to my collaborator, however rest assured that attempting to download a PBIX copy of the report is not enabled.

As the administrator of both the Golden Workspace and the shared workspace however, I am able to download the updated Thin Sales Report.pbix file once changes have been applied, should I have the need, however I am not able to download any new online generated reports added to the shared workspace created by either myself or a collaborator using the Golden Dataset (Golden Data Report below).

Switch Reports to a Different Golden Dataset

Now for some really good news. It is possible to repoint an existing thin Power BI Desktop workbook to a different dataset if you so required. Now of course you wouldn’t want to repoint a Sales report to a Finance dataset, but if you take this Golden Dataset approach and later change your mind which workspace to point to, you can easily repoint the thin workbook to a new dataset.

“But all the Data buttons on my ribbon are locked out!” I hear you cry. That is only half true.

While previously you may have taken an approach of repointing the data source via right clicking a table in the fields pane and editing the data acquisition query, this is not available to us in a thin workbook.

Instead, we need to look to the “locked out” ribbon to achieve this.

It is true that most of the Data buttons are locked out in the ribbon, however there is one that still has limited functionality in a thin workbook: Transform data. While the top Transform Data button is greyed out and unavailable, the bottom Transform data button (1) can still be interacted with, and offers only a solution to adjusting Data source settings (2).

Clicking on the Data source settings returns the Power BI datasets picker to view, where you can select a new remote dataset. Note that you cannot change the data connection type however, so you are still limited to another source of data present on PowerBI.com.

Summary of Key Points

- You can upload a PBIX file to the service and use it as a Golden Dataset (one dataset to rule them all).

- You can also set up a Golden Workspace to hold your Golden Dataset(s) that you can then link many reports and workspaces to the Golden Dataset.

- You can create as many new reports as you want remotely connected to this Golden Dataset.

- Connect a new blank Power BI Desktop file effectively creating new thin workbooks.

- Indirectly create new reports online in the Power BI Service.

- If you create a thin workbook connected to the Golden Dataset and create a report, you can publish it to a different workspace and it will work.

- Anyone with build access to the Golden Dataset and a local copy of the desktop generated thin report (PBIX file) can work with the file on their desktop and successfully interact with it on their Power BI Desktop. If you later remove their build rights to the dataset, then the local thin workbook (PBIX file) will stop working because it can no longer access the Golden Dataset – very cool!

- Online generated thin workbooks can’t be downloaded.

- You can modify and update your Golden Dataset and republish at any time, and the changes instantly affect any thin workbooks that you have created connected to the Golden Dataset, and you have the Impact Analysis tool to help you manage this –sweet again.

- Any user with access rights can build local measures to a thin Power BI Desktop workbook without impacting the Golden Dataset.

- With a suitable business process, end users can advise the owner (admin) of the Golden Dataset of any useful local measures that have been created. The administrator can then migrate these measures to the Golden Dataset for the benefit of everyone, a task made simpler when you copy the measures between two PBIX files with Tabular Editor.

- If needed, you can repoint any thin workbook to a different copy of the Golden Dataset. This can be good if you started your journey without using a Golden Dataset but now want to backtrack and sort this out.

Let me know what you think of sharing Power BI Golden Datasets via Golden Workspaces in the comments below. I also would love to hear any other ideas you have on how to get the most from the Golden Dataset.

Hi Matt,

Is it possible to replicate or mimic Power BI Report Server folder and subfolder structures (for report organization) in Power BI Service?

Thank you

Hi Matt,

Thanks for the elaborate explanation.

We recently ran into a very tricky issue but couldn’t work it out.

We have the golden dataset in a PPU workspace. We then built different reports on another PPU workspace pointing to the golden dataset and published them to the App. Users have pro license and we gave them viewer and build permission under the golden dataset and viewer permission under the workspace that hosts the reports. However, users still received the prompt that they don’t have access to the underlying dataset.

I wonder if this has anything to do with PPU workspace? Appreciated if you could please shed lights on this.

Cecilia

Yes. As it’s name suggests, premium per user is pay “per user”. If you build in PPU, then everyone consuming it must have PPU. Otherwise no one would pay $5,000 per month for full premium. You must have full premium if you want free/pro users to consume the premium content.

Hi Matt

My question or scenario is around, if I had a team of developers working on their own versions of this report and the end goal in the is to stitch them all together into one PBIX file using a shared power BI data set. My question is are there any potholes that should be expected when it comes time using a Shared Power BI data set and copy pasting it all into one PBIX file.

Bear in mind those developers, do their own calculated measures in their thin reports. The only pothole I see is how would I go about by bulk uploading the 15 – 20 measures into the Shared data set here? Am I able to use the ALM tool kit here so that all the measures get pushed eventually back into the Shared data set and then we can eventually do the stitching of all the different reports into one?

Thanks for your time and this amazing thorough article.

This is not really a substitute for a good code source control tool, particularly if you have multiple people working on it at once. Consider

1. SharePoint (or equivalent) with check in/check out. There could be some duplication.

2. look into ALM toolkit and see if that can help http://alm-toolkit.com/

Thanks for this article. Very informative.

I’m wondering about the build capacity in relation to RLS, CLS, and TLS. Is it possible to give a developer build access to a golden model, but to restrict access to specific rows, columns, and tables even with that build capacity? Thanks!

Great Article Matt!

A quick question, I have a need to create one Golden dataset for the Globe and multiple reports for several countries. The UI of the workbook for each country is different but the underlying data structure will be same. Is there a way to limit the data based on country field, while creating workbooks for each countries ?

RLS on golden dataset may not be an option, as there will a need for some users to have access to multiple country reports.

At the moment I am thinking towards the parameters, but do advise if there is any better approach.

RLS is the solution. If some countries need access to multiple country data, then simply give them that access via RLS

Hi Matt, many thanks for the article. I’m new to the concept of the golden dataset – so is the idea to have literally all the business’ data tables within one model – a single golden dataset? Or would you have say a ‘Finance’ golden dataset, an ‘Operations’ golden dataset, etc?

Hi Joe,

Generally speaking, I would have separate datasets.

The idea of the Golden Dataset(s) is that the one dataset is used for every report that taps into the same information.

For example, you might build a dataset for your OH&S manager that reports on organizational safety. You might also have a report for your Environment Impact Manager that, in part, taps into the same dataset to report on the Environment related Safety issues.

The 2 different reports access the same Golden Dataset, ensuring both managers are getting the same information relating to environmental safety issues.

This is such a great article! Thank you Matt. I do have an important question though – how can you handle the deployment process to higher environments with this approach and deployment pipelines in a premium capacity? Can you? Ideally, I would have my DEVELOPMENT thin reports connected to my DEVELOPMENT golden dataset, and then my PRODUCTION thin reports connected to my PRODUCTION golden dataset. And then normally with deployment pipelines I would handle this environment promotion and dataset connection using parameters or data source rules, but it doesn’t look like this option is available with a live connection to datatsets. How would you handle this?

Hi Jared. I am only getting to this now (I had some time off). You are right – this is the “non-premium” version. In fact I first wrote this article before Premium and Pipelines were even available. I have not done any work work with Pipelines, so I am not in a position to recommend or comment. I do know that parameters exist, but I can’t say any more. Sorry.

Hi Matt, great article. Thank you. I would like to know your approach on one use case in my project.

We have created common master data set (golden) which resides in separate workspace (premium) and we are live connecting to that master dataset to create report.

But in this approach person connected live to DS can not transform data and publish. Can we create some intermediate way to access data and transform without touching Master data set ?

We want to enable report creators to transform data locally for them and not update master data frequently. But also want to maintain separate DS and Report workspaces connecting via live connection.

Note : Data set and report are published in separate premium workspaces.

Thanks

Siddarth

Well, you can build a composite model in Power BI Desktop. Connect to the live dataset and extend the model with new local tables and local measures. You cannot modify tables that are already loaded, however. One thing you can investigate is creating dataflows for some of your table objects, and let your users load those locally in their thin workbooks.

Thanks Matt,

I have already tried composite model and like you said we can not modify already loaded tables which is actual requirement. I will look into dataflows option.

Thanks.

Hello Matt,

Is it possible to achieve same “single version of truth” just using Excel 365 and Sharepoint Online ? We don’t use PowerBI just reports created on top of Excel data models which duplicate data model with every new excel file (report) ?

I was about to say “no”, but I need to change it to “I don’t remember”. It’s such a long time ago now when I last thought about this – maybe 5 years. In the past, you could certainly load power pivot workbooks to SharePoint Enterprise Edition and they would work online. I simply cannot recall if you could create a thin workbook from it. There must have been something, because I spoke to MS engineers about the possibility of editing the connection string of a power pivot workbook and pointing it to another file (it’s greyed out by default). I just can’t recall the details.

Sorry, that’s all I can tell you

Hi Matt,

Is there a way to do a stress test (aka environment shakeout) of Power BI Golden Dataset created in Premium node?

The objective of this test is to see how many reports or dashboard this can handle and what is the response time?

Thank you

I don’t know of any specific tools, but I guess you could use automation tools to hit the server all at once. There are very good performance reporting tools in the service, and also you can now allow auto scale up to a higher node if it hits capacity (I seem to remember).

Hi Matt,

Great article thanks, we are well on our way down the Golden Dataset/Thin report road. 2 quick questions I hope you could help with.

1. When repointing a thin report at a different city of the Golden Dataset, the thin report seems to drop any local formatting. i.e a locally formatted number, formatted to 2 decimal places resorts to about 10 decimal places when I repoint. Do you have any advice?

2. We are using composite models and making use of calculated columns in the thin report, this requires a local copy of the model on the thin report. When I publish the report I can’t find a way to schedule refresh the local model dataset that appears in the service. I can manually refresh but not schedule. Any advice?

Regards, Steven

Regarding 1, I assume you are referring to different users with different locale settings/regions. I would expect it to work. If not, I suggest you log a bug with Microsoft and see what they say.

Regarding 2, does the user publishing the thin report have authority to use any gateway, etc? I assume so, because you said they can manually refresh. Are you sure that the thin workbook is not being updated when the main report is refreshed? I would check and confirm that first. If it is not being updated, I would log a support call for that too.

Hi Matt,

It should be a quick question.

Is it a good idea to have separate Golden Dataset for each environment like Dev, Test, UAT and Production? Especially when working on a P1 or P2 capacity?

Thanks

Premium provides a multi tiered architecture to load up Dev, then promote through Test, UAT and then prod. If you want this feature, then it works well and you can use it. If you don’t have premium, you can simulate this architecture by using Desktop as your Dev, and then manually promoting into workspaces for Test and Prod. If you don’t want/need such overhead (think self service BI), then you can just use Desktop for dev, skip test and use the workspace/app for Prod. Which approach you use depends on your needs. My view is one is not better than another, just different.

Thank you Matt.

Hi Matt,

I successfully created a Golden Dataset in my organisation. This composite model comprises of 1 fact and 15 dimensions with over 150 million records in fact.

Question for you, is there a way to determine how many aggregated tables should be created so maximum queries are serviced within Power BI and do not escape to data source? Creating separate aggregated table for each report does not sounds a good practice.

Any suggestion would be highly appreciated.

Thank you

There is no simple answer here – it depends on the circumstances. One thing for sure, you don’t want to create an agg table for each report. If you do that, you may as well use SSRS and SQL Server. My general advice is to monitor the queries going to the DB (us SQL Profiler, I guess), and work out what queries are being sent to the DB. I also suggest looking at anything by Phil Seamark on the topic. You could start here https://dax.tips/category/aggregations/

Hi Matt,

I really appreciate your time and advice.

Thank you

Thanks for this.

You mentioned a future post about the Golden Dataset concept and how to incorporate deployment pipelines. Have you posted on this or still pending?

Yeah, sorry – I haven’t got back to it, and can’t see it happening any time soon. Sorry for promising and not delivering

Hi Andrew,

I have used pipelines with the Golden dataset using inbuilt deployment rules to change data source between environments (Dev/Test/Prod). This worked fine however the problem I face is doing the same with regards to the thin report. I can do it manually however that means the pipelines only work for the datasets which is this case rarely change. I am interested to know if any thing has changed or anyone has found a work around.

I don’t have premium so I haven’t seen this, but I understand the problem. I suggest you search for an idea at ideas.powerbi.com and vote for it, or create one if there is none. As a work around, you could investigate using OneDrive to deploy our thin workbooks meaning you don’t have to publish them (just save them). Out of interest, what is the process you need to follow with the thin workbooks that is not supported by pipelines?

Outstanding post – thanks!

This is one of the best overviews for this concept! Thank you.

Hi Matt,

I noticed, you did not mention aggregated tables in this article.

If I’m developing 20 reports from a Golden Dataset and each report requires a separate aggregation (or aggregated table). What is the best way (or place) to create aggregated tables for each report? Is it within Golden Dataset pbix file or different?

Thank you

I’m not sure what you mean by “a separate aggregation (or aggregated table)”. Do you mean you have a calculated table as part of the report? If so, my generic approach is to not use calculated tables. This approach works, but it duplicates the data. Often (mostly) this is not required as the problem can be solved in other ways. It is however quite common for people with a SQL background to use calculated tables as part of a solution.

Hello Matt,

Thank you for this article clearly describing the processes and impacts.

I still have a small practical question…

Let’s say I have different Golden DataSet with data I want to isolate.

I’m talking about users reading reports, not those who publish. User A can read Dataset X and B can read Y.

I will publish my 2 Thin reports in 2 different workspaces.

However, is it possible to centralize my Golden DataSet in 1 single Golden Workspace, giving READ rights to users A and B, without A seeing the dataset Y and B seeing the X?

I must normally give Viewer rights to A and B on the Golden Workspace (in addition to the Workspace containing the Thin reports) so that they can each read their report using the published Golden dataset.

Answer to myself :

This is possible without giving any rights on the Workspace, but by managing read permissions on individual GOlden datasets in Golden Workspace.

Hi Matt

Great article! Any update when the composite features will be released? We have a golden dataset but are constantly having to add tables that are only used in one report. This feature would be great to help declutter our dataset.

Thanks!

Sorry, i don’t know. Microsoft never gives hard dates as things can and do go wrong. According to the release plans, it should be Nov, but who knows. https://docs.microsoft.com/en-us/power-platform-release-plan/2020wave2/power-bi/direct-query-over-power-bi-azure-analysis-services

Hi Matt

Thank you for this article. It is relevant for many issues I encounter in my BI life !

Unfortunately my company uses Power BI Report Server and not powerbi.com 🙁

Any chance to adapt the golden dataset concept and methods to Report Server ?

Thank you !

Sorry Mark, I don’t know enough about Report Server to comment. There are new updates coming for composite models anytime now. On e they flow into RS, I think you will be able to create composite models there too. Sorry I can’t help more

As a newbie to Power BI, this article is a game-changer for me, and comes just in time as I wrangle with how to structure everything. Thank you!

Hi Matt,

This is an excellent article, many thanks. I’m *about* to implement this approach but am starting with a pbix file that already contains my data model and some reports. Although I can easily delete the reports to create the file with the Golden Dataset, can I avoid having to re-create the reports in a Thin Workbook.

It’s like I want to take a copy of my original pbix but then get those reports to ‘link’ to the Golden Dataset and then discard that pbix’s data model. Or, just transfer the reports to the Thin Workbook pbix. Are either of these possible?

Thanks

Yes you can do it. Make 2 copies (keep the main as backup). Delete reports in one and publish as the gds. in the other, keep all the reports and delete all the tables. Everything will break, but don’t worry. Then get data from the gds, and you are done.

Hi Matt,

Excellent tutorial on Power BI Golden Dataset.

Can we say, that we can replace the SSAS with Power BI Golden Dataset approach or SSAS is an old way of BI?

Thank you

You “can” if you want to, but this approach does not make SSAS the old way. SSAS has a place with enterprise BI. If you want to do it this “new” way, and it does what you need (without needing to set up a server, etc), then well and good.

Hai Matt, great article, clearly explained.

We have multiple user groups. Each user group has its own focus, its own data. But connect to al those different area’s is always the dimension ‘payment agreements’.

Reading your article I suppose we could have a golden dataset for each user group, consisting of a fact table with dimensions.

When the dimension ‘payment agreements’ is attached to every golden/shared dataset, does this mean it has to be refreshed multiple times? Or is there a way so we only have to refresh that diimension of 15 million rows once?

Regards

Ron

sounds to me like you should be considering role level security. You create 1 model with all the data. You create a user access table containing the login credentials (email) and which data they can see. When they login, they can only see their own data. Search for Role Level Security and look from anything by Guy in a Cube

Great article. One question, how do you create these reports from the golden data set when you only have guest permission to the tenant. I’m working on something like this for a client but am struggling to access the data set as it is not my native tenant. I’ve had a look on the Microsoft help but to no avail.

Wow! this is amazing article. I do appreciate you for spending hours and collecting all needed information to teach us.

I wondering how efficient those golden datasets versus the direct connection to SSAS. I noticed the connection to dataset is very slow. Thank you

Ahmed Daffaie

A Power BI Dataset is actually SSAS Tabular in the cloud. I see no reason why performance would be different to an equivalent direct connection to SSAS. The differences can only be cloud latency different spec’d server (which ironically is more likely if your dataset is in premium capacity vs shared public cloud). If the connection is very slow, then maybe there is something wrong with the design of the dataset – it is hard to say.

Matt this is an excellent article – haven’t found anything else as in-depth.

How are people getting around the fact that for measures, all the report builder has in the Shared Dataset is the measure name? Is there a way to give e.g. a tooltip description?

Composite models I think will be really when this starts to spread – at the moment I’d love to be able to give someone a Shared Dataset, but invariably I’ll have them complaining why they can’t add an Excel file or SharePoint list to the data model themselves.

Also another question – if I publish to Workspace B using Golden Master from Workspace A, and then include that report to my Workspace B App, for everyone permissioned to that app to see it do they need permissions against the Golden Master in Workspace A?

My understanding is yes, but I would love to hear from anyone that has tested this and can confirm either way.

Jay. this is now fixed with the June 2020 release of Desktop. The model view is now visible, and you can manage the local measures from there.

Hi Matt,

Great article. I have come back to this many times over the years and I think I finally found an use case for it at the company I am right now.

..It’s a bit more complicated though:

The issue has to do with size and data governance. Our sales golden dataset for example is huge (many dimensions, many columns). But, our sales guys need only 10% of the columns/fields so they can quicker understand and work with the dataset, where other users need like 60% of the fields from the dataset to make analysis on other subjects.

So my question would be:

Can you create a child golden dataset which is connected to the golden dataset (where you hide fields for example, or load less data in)? This child golden dataset would then be the source for the sales guys to make reports on, and another child golden dataset would be the data source for other users to connect to and make reports on. Would this be possible and maybe even more important would you consider this way to be practice in this scenario?

No, I this is not possible. But I suggest you make a comment on the June 2020 blog update https://powerbi.microsoft.com/en-us/blog/power-bi-desktop-june-2020-feature-summary/

There are 3 common ways to give feedback to Microsoft about Power BI.

issues.powerbi.com

ideas.powerbi.com

and the blog.

The good thing about giving feedback via the blog about a feature in the current release is that it gets seen, and doesn’t slip through the cracks like a new idea with just 1 vote. The model view in a thin workbook is new as of June 2020 and it is in preview. If you give feedback now, you may get some attention.

We have copies of reports from Golden Datasets, but when the original report is changed, the others coming off that aren’t. It would be great if the copied reports could just refer to the original (and so change upon report update/changes), rather than being one-time copies.

Something sounds wrong. You only maintain 1 Golden Dataset. All other reports should be “thin workbooks”. Thin workbooks don’t have their own dataset, but instead use the Golden Dataset. All changes in the Golden Dataset should be available in any thin workbook.

That’s right – it is more about the report changes, really – keeping the copied report the same as the new one (that is in the same workspace as the GD).

Why do you have copies of the same report? If the 2 files have the same reports in the same dataset, why not just use one?

Two separate apps (for different audiences), but they have ended up with the same kind of report in each. The original is published to workspace A, say (that obviously includes the dataset), but then the app for workspace B has an out-of-date version of the report, so I copy over from A. The dataset remains in A only.

If the reports are the same, and if there are constant changes, I would consider using the original concept of the Golden Dataset. Have 1 report, save it to OneDrive and import it into 2 different workspaces. Both workspaces will have a copy of the dataset and the report, and each dataset will need to be refreshed, but you get to reuse everything. Alternatively, you could use a single App and use Role Level Security to control what people see. https://www.fourmoo.com/2020/05/26/did-you-know-you-can-now-selectively-show-report-pages-for-specific-users-in-power-bi/

Great article Matt.

I am starting to get into using Incremental Refresh. While the service may have accrued 5 years of data, my desktop pbix only gets a couple weeks of data. This makes it easy to modify the report without the pain of queries pulling 5 years of data. Problem is that when I publish, it overwrites my 5 years with 2 weeks of data. I’m trying the ALM Toolkit but haven’t found a way to publish the report but leave the dataset (5yr) alone.

Your article here makes me wonder if I should use the golden dataset instead. My question is, is there a way to connect to the golden but have desktop just get the last couple weeks to keep development manageable, but when I publish, open the door again?

ALM Toolkit will do what you want, but only for Power BI Premium (currently). Deployment pipelines are very close to being released (Microsoft announced this at MBAS – you can watch the Christian Wade Enterprise video to see it In action) I saw a blog post recently from Chris Webb showing how to solve this problem with deployment pipelines. https://blog.crossjoin.co.uk/2020/05/24/limit-the-amount-of-data-you-work-with-in-power-bi-desktop-using-parameters-and-deployment-pipelines/

Trying to set up a Golden Dataset, but in desktop my “Get Data” -> Power Platyform only has 3 options. It does not show an option for “Power BI Datasets”.

I don’t know what you mean by “Power Platyform”. I assume you are trying to do this from Power BI Desktop. What version are you using?

Great post Matt. The concept of a golden dataset and publishing reports across workspaces is very powerful indeed.

I implemented something very similar but running into some problems. In my case the Golden Dataset has RLS and is published to the Golden Workspace and a Thin Report 1 is created from this Golden Dataset and published to the same workspace and published as an app. All well and good.

I have a Thin Report 2 which connects to this Golden Dataset and is published to another workspace and distributed as an app. But the users cannot view the report as it says permission is required to access the report. I have tried everything, I have given users permission on the Golden Dataset app as well and they are assigned roles in RLS but still the same issue. Both the workspaces have been upgraded to the modern workspace. Any ideas ?

Mmmm, tough. There are lots of touch points. I believe it should work but I can’t say I have actually configured this. Do the users have access to the Golden Workspace? And the Golden Dataset? These are both different to the RLS settings

The users dont have access to the Golden Workspace but they do have access to the app and hence I would have thought they had access to the Golden Dataset. I thought that should have been sufficient. Thanks for your reply, its got me thinking in trying out a few options.

Hi Matt,

Interesting approach. We’re tring to move our set-up to something similar, but are running into two issues: we wan’t our users to be able to download the .pbix file of a report in a thin workspace that was built on a dataset in the “golden workspace”, and we also want them to be ably to access “Analyse in Excel” through the app. Both options are unavailable however, even when the users have build permissions on the dataset. Do you have any suggestions as how to fix this? Are there any settings that control this behaviour?

Thanks in advance!

Best,

Eva

If you add the “build” permission on the golden dataset to the user/group that has viewer access to the workspace, then they can built a “online thin report” in any v2 workspace or desktop without the workaround you outlined.

To do this: On the dataset, click the three dots, select “Manage Permissions”. Then on the user or group line, click the three dots on the right and then select “Add Build”.

Thanks for your suggestion. This is a better way of managing the online report generation with workspace collaborators. I have updated the post.

Well, you have your certified Enterprise Golden Dataset (one version of the truth) used by hundreds of thin reports, related dashboards, and thousands of users. Impact analysis will give you these figures (including views), but it won’t tell you how many sophisticated thin reports might become unusable or wrong due to a potential change of the underlying Golden Dataset. Of course, you can inform all report authors of the upcoming change, the impact of which will be immediate, but that’s a bit like changing the company name with short notice. You don’t want to do that regularly. Thus, again business users will have to wait for IT to make required changes to the data model.

Hi – can you do separate calculated tables off the golden dataset?

Do you mean in a thin workbook? Currently no, but it is likely to work when composite models are released in the coming months.

Wouldn’t we take a hit on refresh performance using this method? I have several data sources that are required across multiple report, and each report also has a set of additional data sources unique to that report. So if I wanted to use this technique, I would have to combine all the data sources into a single file, even those not required, since a report can only connect to a single data source when using this shared dataset method, correct?

So now with my previous 4-5 datasets that take anywhere from 5-25mins to refresh individually, wouldn’t that now make this single golden dataset’s refresh time, take hours rather than minutes?

There is a refresh hit – each copy needs to be refreshed, but this is not a “performance” issue per se. And this approach you cannot have different data sources, and hence yes, you would need them all in one. As I have mentioned in the comments below, I am in the process of updating this article. Check back in a week or so for the latest advice.

Looking forward to it. Thank you!

Very good Matt, have you considered using Data Flows in this process in some way?

Jason and I are currently updating this blog. It will be updated shortly (maybe even next week). Yes you can use Dataflows but that just gives you access to the tables, not the model. The best way to do this now is using shared datasets (watch for the updated blog)

I just came across this article after creating a dozen reports off of the same dataset. I would like to transition everything to the golden dataset idea, but I have a Question.

My golden dataset is a combination of 2 Oracle sources, a SharePoint document library, and an Excel file saved on SharePoint. How do I convert my reports to point to the Golden Data Set without recreating the reports?

Hi Scott. 2 things. Firstly, this blog is desperately in need of an update. It is now better to use shared datasets than the approach I describe here. https://docs.microsoft.com/en-us/power-bi/service-datasets-share

In short, you load 1 golden dataset into a golden workspace. From there you can create new thin workbooks directly to the golden dataset without having to replicate them (albeit automatically) to other workspaces.

Second, you can’t copy and paste pages between workbooks, but you can cut and paste all visuals on your page. So create your new thin workbook connected to the shared dataset, then open a duplicate copy of your old workbook containing the visuals, click on a page, Ctrl+a to select all, Ctrl+c to copy, then paste on the thin workbook.

Hello Matt,

Brilliant article!

“Any new measures you create in a thin workbook are stored as part of the thin workbook and are not visible in the Golden Dataset. (Note, you can’t create calculated columns or new tables, ONLY MEASURES)”

(I capitalize the latter sentence).

This is enlightening for me. I’d like to explain my work scenario (currently in a PBI **FREE** environment)

I use this approach to model and analyse costs of my company fleet vehicles: this is the golden dataset, with imported data, ETL, data model and general-purpose measures. Then I created one thin report and add a few local measures. Later, I will create a few more thin reports.

I miss the lack of permission to add tables (in general: to edit data model) of thin reports, but I understand the reason and accept it.

Now the company requirements have changed: they need to analyse cost of products IN GENERAL, not only vehicles. In order to leverage several analysis reports, I should add differents tables and relationship to “children-models”. For example: despite vehicles reports just need one table (purchases, and not much more than this), FOOD PRODUCTS report needs an addictional table (i.e. registered number of customers – we call it “occupancy” – ) and local measures. Well, everything is fine with local measure, but what about custom addictional table? I’m not able to add it at thin report level, and oppositely if I add it to golden dataset there is risk to grow it unnecessarily. Further, addictional custom tables aren’t limited to one, but there are several ones in order to provide specific dimensions to various analyses.

So, the challenge is to generalise golden dataset in order to provide not only MEASURE but also TABLE and RELATIONSHIP, at the same time the golden dataset could not became TOO general and complex.

I still can’t figure out in which layer I could “inject generalization”.

Thank for your attention.

In SSAS (the big brother of PBI) there is a concept called perspectives. You can have additional tables in the GDS and deploy them separately in different cubes. My guess is that this will come to PBI in the coming years. Until then, just add the new tables to the GDS. if it is less than 500MB, it is not really “big” anyway. JMO

Thanks Matt,

I will try this approach.

First attempt at PowerBI here – a simple 2 table SQL Server join query. Found out apparently that there is no way to create a connection to a SQL Server and reuse it for multiple data sets.

Do we really have to create and store credentials for each and every query that needs to be done?

We use a ReportUser SQL Server login, not AD, with access to only the databases and tables needed for reporting.

No AD account to manage, nothing to expire, and a lot less management problems.

What’s MS thinking, everyone needs to manage hundreds of individual database connection credentials in all the PowerBI reports for our large enterprise?

What needs to be done when me, the analyst, moves to a different position in the company and the dozens of PowerBI reports I’ve created are owned by another employee?

Visual appeal is great though where is the TCO considerations? Lightswitch again?

I am sure you are missing something. Credentials can be stored for SQL Server at the DB level and the server level

Interesting question. I can’t say I have ever tested this, but I am surprised to hear it. There is no data in the thin workbook, but it would store the last query in the visuals, I guess. What happens when you interact with the workbook. It then must send a refresh request.

I ended up working with Microsoft Power BI Support. It took a week or more of testing with them. We found that the underlying Master Data Set, if stored on one-drive must be refreshed a minimum of 5 times during the day, even though the underlying data itself had no changes. Now any measure changes in the master set will be immediately updated in the thin client report. Also, If I add/modify/delete visuals in my thin client report, they will now update on the Power BI Service immediately. It was hard to problem solve as the thin client always was updated with the values from the data from the master data set, just new measures and visuals would take a long time. I was waiting up to 5 hours sometimes for a visual modification to show up on my thin client report.

Hi Angela. I am really interested to find out more about this. What do you mean it must be refreshed a minimum of 5 times per day? Do you mean if you refresh it once, then nothing happens?

If I open my thin report on my Power BI Desktop, why does it not update automatically from the Golden Dataset?

I have gone with option #2 of the golden dataset and it has worked very well until recently. Before my golden dataset grew in size, my thin report would update pretty quick. All of my reports are created through Power BI Desktop. Now I am waiting up to 2 or more hours. This has proven a little frustrating as I update or add measures. I can’t utilize the revisions or updates on my thin report visuals for awhile. Would option 1 work quicker? Also, if I went to option 1, can you create a report on the Golden Dataset and save it to a different workspace. I’m not clear on this one.

Yes, option 1 would make the published version immediately available (after it is published). All you lose is the ability to automatically publish to more than one workspace. But you can still manually publish to multiple. You should be able to switch – just publish it manually. If the dataset is duplicates ( same name, twice), you should be able to repoint your existing thin workbook to the new one.

Hi Matt, thanks for sharing the approach – just what I’d been looking for.

Trying to implement it now, creating some thin workbooks but have been experiencing some issues while creating thin workbooks in the Desktop. Just wondering if you’d experienced anything similar or had any ideas?

“Cannot load model. We couldn’t connect to your model in the Power BI service. The dataset may have been deleted, renamed, moved, or it is possible that you don’t have permission to access it. Failed to connect to the URL ‘https://api.powerbi.com’ white attempting to call cluster connection”

I cannot see what I have changed to affect this, and it seems intermittent across thin workbooks. I’m guessing it could just be a temporary connection error to the website, though the most frustrating part is any combination of trying to ‘Cancel’ or ‘X’ the dialog box does not work, so each time requires a forced shutdown of the desktop. Luckily recovered files are pretty good but would be good to understand what’s happening.

Thanks, Patrick

No, I haven’t seen this before. If you are sure you have not changed anything, I suggest you use the diagnostic tools inside desktop and create a support request. File\Options and Settings\Options\Diagnostics

Hi Matt

Super useful trick!

Thanks for sharing.

If you set everything up like this, do you know a way to keep track of which dataset & reports actually link back to a certain ‘golden dataset’? Can we see the original link or something somewhere?

Thanks!

KR

Tom

In Power BI, goto Home\Edit Queries\Data Source Settings. You can see the link and also change it if needed.

We have actually been talking about this solution for our instance of PBI – so very, very glad to come across your blog. But something came up today that I was wondering if you have any experience with. We have a group of datasets that feed individual reports. The report author left the company, and when their AD account was removed, we found ourselves having to scramble to assign ownership of every dataset to someone else because the reports could not load. Have you ever tried assigning ownership of a Golden Dataset to a non-person AD account? Just wondering if assigning to a group would allow anyone within that group to make changes rather than having to go to just one person all the time – and also not having to manage the ownership in the event they exit.

I’m glad this has helped Calvin. Yes what you describe is a common problem. There is a solution to moving ownership using the Rest APIs. https://docs.microsoft.com/en-gb/rest/api/power-bi/ I am not an expert and have never used the API, but I understand you can solve most issues like the one you refer to. Regarding of assigning ownership to a non-person, that may be a licensing issue. Regardless, I think if you follow my advice and always use a workspace with multiple administrators then you wont have a problem. This problem only occurs when people share from their own MyWorkspace. I always recommend people never do that, for this exact reason.

Hi Matt,

Given that MS has introduced Dataflows, will Dataflow replace as the solution for Golden dataset?

What scenarios do you think the solution outlined in theis blog will be still useful?

I am trying to understnad scenarios where the solution here can be used.

Thanks,

Anand

I am planning an update sometime, as I mentioned here https://exceleratorbi.com.au/moving-queries-from-power-bi-desktop-to-dataflows/

The big difference is that the golden dataset is a data model. Dataflows provide data (ie tables) not a data model. The golden dataset doesn’t need data flows per se, as it is an encapsulated, complete solution including data and data model.

Good point… “Dataflows provide data (ie tables) not a data model”. Thanks.

Hi Matt,

How is this Golden Data approach different from creating an App or App workspace?

Or when should we consider App vs. your suggested approach #2 or #3?

One thing Microsoft is often bad at, is its naming of things. I think App Workspace is a dumb name. It is just a workspace – a place to collaborate with others. An App is a “package” of a dataset, reports and dashboards packaged up as an “app” that can be distributed to others, including people outside your organisation. The Golden Dataset is something different. It is a “master” version of the data model that can be deployed into 1 or more Apps and 1 or more Workspaces without having to create duplicate copies. You create 1 golden dataset and the reuse it in may places. Sharepoint and OneDrive keep all uses in sync.

This is a further (third) update to an article …

Interesting information, I definitely like the idea of a master data set that everyone works from. (not too keen on giving private data to MS, Azure, yes I’m paranoid… )

You said this is the third version of the article. Presumably it would be useful to read the earlier articles, but like too many other authors you make it too hard to find. You provide the dates of the earlier articles, but when I look on your blog list, THERE ARE NO DATES, just titles! WHY NO LINKS in this article to the ones you refer to. It is so easy for you to do …

Fair point Ron. The issue is that I didn’t make it clear – obviously. I edited the original articles and re-released them. The old versions therefore don’t exist anymore – that is why there are no links and you can’t find them. I do this deliberately because the alternative is I would have stale content (in this case 3 articles about the Golden Dataset) and I run the risk that people don’t read the latest and greatest. I will try to make it clearer that the originals have been edited.

I didn’t know that. Thanks for sharing.

You are right, these options don’t auto refresh. You need to do it manually, or you can use a tool like power update. I’m thinking now that you could create the golden dataset with connections to dataflows, and schedule dataflows to keep the data refreshed. I might write an update about that.

This is a great article – maybe there is a new way doing this with Power BI dataflows?

It wasn’t that clear to me – how do you refresh your golden dataset for options 2 and 3?

I really just want to create this one ‘golden’ dataset and publish it to Powerbi.com and then have reports in 2 other workspaces using it.

One thing to note is that if you click “save as” on a thin report connected to a golden dataset in the Power BI service, then the new report will no longer be thin as it will have copied and attached the golden dataset to itself. I found this to be a problem when I published a report on a clients tenancy. He made a copy of the report by clicking “save as” and then he did a bit of work on this new copy. His work had to be later redone as you don’t seem to be able to separate the two again

I guess that the new Composite Model functionality is another answer to the question “How can I build 1 master dataset, use it as the 1 version of the truth, but still allow people to do their own thing?” Because now you can use DirectQuery to provision the equivalent of the ‘Golden Dataset’, while still allowing users the flexibility of doing additional modelling via Import.

@Jeff Weir – I was thinking the same thing. Many of my users “do their own thing” but just adding that one or two additional Excel worksheets that are there to just enrich the golden dataset with a few columns of their own. In Excel they used these sheets for “VLOOKUP” purposes. Prior to the July 2018 update, doing the live connection to the Power BI Service meant that users were unable to add their own imported data to this published data model (just as you said.) So that has caused me to shy away from recommending this as a best practice for everyone.

A possible enhancement to this approach could be to store Power BI template files (.pbit) of your saved pbix files in SharePoint as well. If users needed to get a local copy of the golden dataset, they could then get this pbit and refresh. However, they would have to know that they were making a copy of the golden dataset (a fool’s gold dataset?) and there would need to be a clear method to handle these multiple data models with slight mutations.

There is a BIG difference between Direct Query mode and Import Mode, and I wouldn’t want to give up the benefits of Import just to use it as a control mechanism for standardisation – JMO

I m looking for this guide thanks …

What are you looking for?

One thing that worries me from the very beginning – Once I will change my position in the company, is there a way to smoothly transfer the dataset (either loaded to OneDrive or directly to PBI account) to one of my colleagues account, without losing highly developed set of workspaces, reports and group of recipients?

Always create a new App Workspace. That way admin rights can be shared and transferred. Use an internally public OneDrive or Sharepoint folder to store the Golden Dataset. Do those things and all will be fine. Even if you do it in your personal workspace, Power BI admins have tools to make changes and repoint workspaces and workbooks

How to publish a model dataset in Power BI report Server?

Report server is a whole different topic. You need 1) Report Server (of course) and 2) the specifically edition of power bi desktop that is used to publish to the server

I have both conditions, the Reports server and the PowerBI desktop optimized for the report server.

I am not able to import the dataset to the server.

Sorry for the confusion in my reply. You “publish” a report to report server by using the optimised Power bi desktop version. But report server is not Powerbi.com, and I guess you can’t use his technique with report server.

Thanks Matt … this is a very helpful article.

I’ve been using a golden dataset for a while now – loading it to 3 different workspaces and having a separate refresh schedule in each workspace. Had no idea till I found your article that it could be a single dataset “serving” the 3 workspaces.

I’m a bit unsure how this would work with refreshing the dataset via a PBI Gateway. Jerry’s comment earlier suggests this can work, but how would one configure this? Would you configure the dataset in all 3 workspaces to refresh via the Gateway? Or, if you setup the refresh schedule in just one of the workspaces, would all 3 workspaces reflect the refreshed data? Has anyone tried this?

When you load to OneDrive and then import to a workspace, the workspace has its own copy of the data set (3 workspaces means 3 copies – hence 4 in total). Power BI and OneDrive talk to each other. When the OneDrive version is updated, any workspace versions will automatically be updated. So you have 2 options. 1) refresh the OneDrive version and hav eit auto flow through, or 2) configure the gateway for all workspace copies (3 gateway configurations in your case). OneDrive doesn’t have the ability to refresh itself. The file needs to be “opened” and then refreshed and saved. You could do this with Power Update ($500) or just use the gateway on all copies.

Thanks Matt. I’ll give it a try. Thanks again for the great info.

Is the only difference between storing the model (ie dataset) in the cloud (onedrive/sharepoint) verses on premise with a gateway (when you want to publish to multiple workspaces): When changes are made to the model the cloud options will automatically update the model in ALL workspaces and the on premise version would have to be published manually to each workspace where the model has been previously published?

There is no difference in the refresh scheduling, correct?

For both on premise and cloud datasets deployed to multiple workspaces you still have to setup maintain the refresh schedules in each separate workspace, correct?

There is no difference in permissions to make changes to the dataset, correct?

The users have to have edit permissions in the workspace, they therefore can download the dataset, make changes and republish and override it?

Thank you.

Hi Matt,

Great article.

I just want to add some demo I have done so far based on your article.

I’m always looking for this kind of Golden data set, but also a Golden reports (then customize for each app). So with the help of your article, I finally “almost” there. I have tried to pass by the tabular model but all the reports are disappear (pbix > Azure Analysis Services > obtain tabular model) so I dont really appreciate this method.

So my approach to have golden dataset and reports is :

1/ I have a golden dataset with all the reports for different group of users but each group will use only a subset of reports and I dont want to have them in different places so I have only one pbix file (the viz works perfectly with RLS so I dont need to apply filter on group of business users)

2/ With the help of your article, the onedrive master pbix file synchronizes with their “Import pbix” ‘s file. But I dont want them to sync (I dont want the users see reports that are not belong to their business) so I turn off the sync One Drive option, activate Schedule Refresh.

3/ By doing that, every time I want to modify the report, I can just go and modify the original and then activate sync, and by the end I will delete the non-necessary reports for this app.

Let me know what do you think about this approach!

Thanks again for this great article.

Dung Anh

Nice article, Matt. I work in an organisation that hasn’t yet fully come to exploit what Power BI has to offer and hence didn’t really have the chance to see this being used. I created something similar to what you have described before the connect to Power BI feature was made available

The inability to view the data model and the relationships will be a pain-point to create custom measures. You mentioned about migrating measures to the Golden Dataset? Is this something as trivial as sending an email to the “owner” of the Golden Dataset to add in the desired measures? Or is it something that will be uploaded to the thin report on PowerBI.com

Also, if the golden dataset as row level security enforced, would that affect creating custom measures in some way?

Thanks

Yes, I think the process to migrate measures could be a simple as an email. They key is that everyone needs to know what to do. As for RLS, that is a great question – I have never tested this, but I assume that the new thin workbook would have the golden dataset RLS applied for the user of the thin workbook. Nothing else would really make any sense from a design perspective.