I have had the idea of power query self referencing tables in my head for over a year, and today was the day that I tested a few scenarios until I got a working solution. Let me start with a problem description and then my solution.

Add Comments to a Bank Statement

The problem I was trying to solve was when I download a digital copy of my bank statement, loaded it up into Excel using Power Query and then wanted to add some commentary to some of the transactions. If you just add the comments to the source file then everything is easy. But it is often not practical to add the comments directly into the source file for a number of reasons. This could be because you get a new copy of the source data each time you refresh (eg you get a CSV file that replaces the old file, and the file just gets larger each month), or it could be if you are using a “combine multiple files” technique and you only want to deal with a summarised, cleansed version of the final data instead of dealing with the source files directly.

Once you have loaded the table in Power Query, it is possible to add a new column to the resulting table (shown below). The problem with this approach is that the comments are not logically linked to the rows of data in the table. Take the example below. The data is loaded from table 1 using Power Query, into table 2 on the right. I then manually added a new comment column and added some comments (as shown below).

The problem that can happen is demonstrated below. If the sort order of the source table changes and then you refresh the query, the comments no longer align with the original data. And this is despite having a unique ID column in the original table.

The problem is that the new column I manually added to the Power Query table on the right is not logically joined to the actual table, and hence the comments are actually in a column next to the table rather than part of the rows in the main table.

Enter Self Referencing Tables in Power Query

I read this blog post from Imke quite some time ago, and that was what gave me the idea on how I could solve this problem. The idea is to load table 2 above a second time (after adding the comments), and then joining it back to itself, hence logically joining the manually added comments to the rows in the main table.

Note: Prior to completing the self referencing steps below, I used Power Query to create the second table from the source table. This was my starting point.

Then I loaded the resulting table 2 a second time as follows:

- Select in the second table

- Go to the Power Query menu

- Create a new query using “from table”.

I renamed this new query “Comments” and selected “Close and Load To” so that it only created a connection but didn’t load the new table to the worksheet.

The next thing to do is to merge the original query with the new query. To do this,

- Go back and edit the original query for table 2

- Add a new step to the query using “Merge Query”

- Merge the second “Comments” query with the original query by joining on the ID column as shown below.

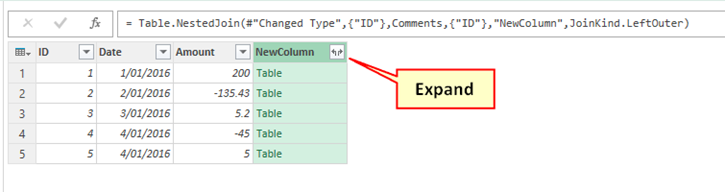

This will give you a new column in your query, and the column contains a table (as shown below). You can then expand this new column to extract the comments.

I only extracted the comments column, and deselected the last option as shown below. Note, it is important that you deselect “Use original column name as prefix” so that the new column has the same name as the original source column.

Click OK.

Edit May 2020. Power Query is always changing. It seems there has been a change which introduces the need for another step at this stage. After expanding the new column, you will see that Power Query renames the new column and calls it Comments.1 (shown below in 1).

You need to edit the code shown in 1 and remove the .1 so the new column has exactly the same name as the original table you loaded.

After you have made this change, you can then close and load.

When you look at the table now, it all looks the same as before (see below), but there is one important difference. The last column in the Power Query Table is now a part of the table and not a manually added on column. You now have a self referencing table. EDIT 2017. If you get a new column called Comment2, just delete it. Everything else will work. There was a change to the way Power Query works at some stage and this duplicate column with “2” appended now appears. But you can (and should) just delete it – it will then all work.

To prove this, I completed the same sort test as before. First I sort the source table, then refresh the Power Query table – you can see the results below. Note how this time the comments stick with the row in the table – sweet!

Incrementally Add Comments

Now that the queries are all set up, you can incrementally add comments to the Power Query table any time you like. And you can also add new data to the source data – it will all work as you would expect as shown below.

Real Life Data

The above demo is of course test data. A more realistic real life scenario would be to download a CSV file from your bank, and use one of the many “combine multiple files” techniques to import the data. It is likely that your source data is messy, and contains lots of columns you don’t need. In this real life scenario, it is not easy to add comments to the source data unless you open each file and add the comments there – this is not ideal. Another problem could be that your data source could be a CSV file that uses a “delete and replace with the new larger file containing the latest records” method. In both of these scenarios, you will need to have your manually added comments located somewhere other than the source table. The solution provided here is a simple and intuitive way to manage this for the user (albeit with a little bit of setup first).

The example shown in this post will work just fine as long as 2 conditions are maintained.

- You have a unique ID for each record

- You don’t accidentally load duplicate data – so don’t do that!

But What if I don’t have an ID column

The next logical issue you may have is a scenario where you don’t have an ID column. I tested quite a few scenarios including adding an ID column inside the first Power Query step (add index column). I haven’t been able to find a solution that works 100% of the time, but there is one that can work for most people. If your data is such that each row in the table is unique (eg date, maybe a time column if you are lucky enough to have this, amount of transaction, remaining balance etc) you could create a unique ID by concatenating these columns into a single unique column. This should solve the issue for most people in non-commercial scenarios (those in commercial scenarios are more likely to have a reference ID anyway). You could even create an audit query that checks for duplicates and warns you if this occurs, but that sounds like another blog post.

I hope you find this article useful, and or it inspires you to come up with your own innovative ways to use Power Query.

Here is an article that shows how to use a similar approach with rows instead of columns, effectively creating an incremental refresh of data. https://blog.jamesbayley.com/2018/04/23/power-query-how-to-load-only-fresh-rows-and-create-an-excel-history-table/

If you want a comprehensive lesson on how to use Power Query, checkout my training course here https://exceleratorbi.com.au/product/power-query-excel-power-bi-online-training/

3. Water-Saving Fixtures

With water scarcity a concern in many Indian cities, commercial bathrooms

should be fitted with

low-flow taps, sensor-based urinals, and dual-flush cisterns.

These reduce water usage while

ensuring efficient operation.

4. Touchless Technology

Post-pandemic, touch-free fittings are becoming the new normal in Indian commercial bathrooms.

These include:

Sensor-based faucets and flush systems

Automatic soap dispensers and hand dryers

Motion-sensor lighting

Brands like Jaquar, Hindware, and Kohler India offer a wide range of sensor-based

commercial

bathroom fixtures that are both modern and reliable.

Thanks so much for both the explanation and the walkthrough, Matt! I had found Imke’s description first, but admittedly yours had enough details to actually implement. For my own use cases, I’ve been able to change all this into a single wrapper that I can quickly apply to any existing query. I -think- it’s stable enough to share, so I’ve posted via github.com/dentonlt/ReflexiveQuery. Perhaps something useful for others on the same search. Thanks again for maintaining this particular page over the past (nearly) ten years – it has been invaluable!

I think this is among the most significant info for me.

And i’m glad reading your article. But wanna remark on some general

things, The web site style is great, the articles is really nice :

D. Good job, cheers

At Intellect Outsource, we offer expert Amazon product upload services to streamline

your eCommerce experience. Our team specializes in accurately uploading product listings, optimizing titles, descriptions, and keywords for maximum visibility.

With a deep understanding of Amazon’s algorithm, we

ensure your products reach the right audience. Whether you’re a

startup or a large business, we handle bulk uploads,

product categorization, and inventory management to boost your online sales.

Let us take the hassle out of managing your Amazon store,

so you can focus on growth and customer satisfaction.

I wasted weeks on YouTube and forums before discovering kotelinaya.ru. It cut through the noise with diagrams, real house examples, and clear pros/cons for each kotel type. It’s rare to find such a well-organized heating resource in one place. Highly recommended before spending a cent.

I think everything composed made a great deal of

sense. However, what about this? suppose you wrote a catchier title?

I ain’t saying your content isn’t good., however

suppose you added a title that grabbed folk’s attention? I mean Self Referencing Tables in Power Query 🔗- Excelerator BI is a little boring.

You could look at Yahoo’s front page and see

how they create article titles to get people to open the links.

You might add a video or a related pic or two to grab people interested about what you’ve written. In my opinion,

it would bring your website a little bit more interesting.

Art of Travel, una de las principales agencia de

viajes en India, se especializa en crear experiencias de viaje personalizadas.

Con un profundo conocimiento de diversos destinos, ofrecen paquetes turísticos personalizados, que incluyen viajes de

lujo, de aventura, culturales y patrimoniales. Desde arreglos de viaje perfectos hasta itinerarios únicos, Art of Travel garantiza un viaje memorable para cada cliente.

Su equipo de expertos brinda un servicio de primer nivel, lo que los convierte en la opción preferida para los viajeros que buscan una experiencia de viaje excepcional y personalizada en toda la India

y más allá.

Real advice, not just theory — great explanations about pressure, fuel, and layouts.

If you’re planning renovation, this boiler blog is gold.

It is really a nice and helpful piece of info. I am satisfied

that you simply shared this helpful information with us. Please keep us informed like this.

Thank you for sharing.

Your mode of explaining everything in this paragraph is really pleasant, every one be

capable of simply be aware of it, Thanks a lot.

Looking for real-world heating experiences? I used this site during my build.

Yo, creative soul, I literally just found something shockingly creative, I had to pause my life and share immediately.

This article is not your usual stuff. It’s packed with smooth UX, creative magic, and just the right amount of pixel sass.

Suspicious yet? Alright, go look right on this link!

Didn’t click? Fine. Imagine a caffeinated unicorn whipped up a site after arguing with ChatGPT. That’s the chaos this thing of wonder gives.

So don’t be shy, and share the love. Because on my Wi-Fi, this is worth it.

That’s it.

Found a great walkthrough on installing heating — worth checking.

Is there a way to do this merge, with a query that updates whenever new documents are added to a library? We have a folder that regularly gets new documents received from clients. We run the query to keep updated on the list of files, but we also review the documents and add comments. When new documents get added, the query refreshes and shifts the cells away from the comments. I’ve been able to merge the tables, but can’t understand how to achieve that merge with a live query that will constantly refresh. Thank you!

Basically, yes: this is what the original post is all about. The approach does work: I’ve been using this solution (a reflexive query) for a similar problem for a couple years. I saw your comment as I decided to revisit and optimise things a bit. It is even possible to do this in a more compact and generic way (so that you can transfer the code to other tables/queries), provided you don’t mind typing in the M directly. Too bad to see all the spam comments! Good luck –

Здесь вы сможете найти поставщиков удобрений и агрохимии – 306 производителей – https://mymoscow.forum24.ru/?1-6-0-00029765-000-0-0-1750072583 – минеральные удобрения оптом

For latest information you have to go to see world wide web and on internet I found this web site as a finest site for hottest updates.

my blog post … http://www.mandjphotos.com

Good breakdown of what to consider when choosing a boiler — very helpful blog.

Hello superb blog! Does running a blog like this require a great deal of work?

I have virtually no understanding of computer programming however I was hoping to start my own blog in the near future.

Anyhow, if you have any suggestions or tips for new blog owners please share.

I understand this is off subject however I just wanted to ask.

Many thanks!

awesome

Hi there, I found your site via Google while searching for a comparable

topic, your site came up, it seems great. I have bookmarked it in my google bookmarks.

Hi there, simply changed into aware of your weblog through Google, and found

that it is really informative. I’m going to watch out

for brussels. I’ll be grateful in case you proceed this in future.

Many folks shall be benefited from your writing.

Cheers!

Wow, gorgeous site. Thnx …

https://www.youtube.com/@DaftTaengk

https://www.youtube.com/channel/UCPCLl1O9M4-Rw243NjOlBLA

https://www.youtube.com/@BIMONG

https://kleonet.com/entry/사찰넷-불교-일자리-구인구직-사이트-바로가기/

https://www.youtube.com/channel/UCJLXoLNzHeK70WCJlQNYf-g

Заходите https://chistka-mebel24spb.ru

Заходите Мойка окон после ремонта цены

Уборка офисов в Питере! Чистота – залог продуктивной работы! Экспертный клининг. Выгодные условия. Набирайте наш номер! Нажимайте https://uborka-ofisov24spb.ru

Финишная прямая чистоты? Забудьте о строительной пыли! Идеальный порядок апартаментов и коттеджей. Быстро, тщательно, надежно! Тапайте https://klining-posle-remonta24spb.ru – Клининг СПб недорого

https://www.youtube.com/@영스타미디어

Сияющий дом в СПб! Уборка квартир, блеск в офисах, свежесть в коттеджах. Профессионально, быстро, качественно! Время для себя – ваш подарок! Нажимайте https://uborka-spb24top.ru

https://www.youtube.com/channel/UCe0Qrv4HvRK_iup3X_cNdBA

https://www.youtube.com/@가요여행

Good instructor pornography, keep it up!

For those getting the second “2” column when refreshing, make sure that the new data you are entering (such as comments) is to the LEFT of your unique ID. I tried the above method with my new data column to the right of the ID and no matter what I would do, it continued to create a “2” column.

I then started over, with my new data column at the end of the table (to the LEFT of the unique ID) and it finally worked. I was also able to move the newly created data column to the right within PowerQuery (instead of the resulting table) and that ensured that the newly added data remained, while also preventing the second “2” column from being created.

I hope this helps someone, as I was banging my head against a wall trying to figure that one out.

Thank you

Hi Matt,

The table will refresh, but all the comments I have added are gone. What did I do wrong?

Doesn’t work for me. When I hit refresh nothing refreshes.

I have a source table that has rows removed/added etc. When I remove one, it isn’t removed at the PQ table anymore.

For example. Column A is product code, Column B onwards is forecast. The forecast is hard-coded, column A is pulled from Power Query.

Doing the above steps, when I remove a product code, it doesnt remove in the Power Query anymore. It just remains static?

Matt,

This works great but the merge query is adding another “Comments” column called “Comments2”. I’m not sure what step I’m missing or messed up.

I’m combining multiple worksheets into one and then adding a column manually.

I then use your steps above to keep everything in line with the new data that comes in from those other sheets.

The sheets that I’m combining are getting data from multiple SharePoint lists.

Everything is working great except for the extra column that is getting created with this query.

People sometimes report issues, but I’ve never been able to reproduce them. Did you manually delete the extra column in the final table? Was there only a single column in the merged table in PQ?

I have the same issue. I don’t know the exact reason for this new column, but deleting the column “Comments2” in excel is solving it. It makes sense it solves it as then the column is not taken into consideration during the next refresh.

I would love to know exactly why.

I am using french international settings.

I am using a simple CSV as source.

Hi Matt,

This has worked great for me. However I am now in the situation when my unique ID can be shown as a row duplicate times. And I need to keep those duplicates as they refer to different status for that same ID. For each status I add a unique comment. However the issue is when I refresh the data, the comments for the first unique entry now copies over for all rows with the same unique ID. Is there a way around this?

Nvm. I see the response to my questions in an earlier post. I ended up combing the ID with a unique date column and that solved the issue. Thanks!

I’ve got one more variable added creating a problem for me. I’m able to make this work as outlined, however I already have a separate table created from the original Microsoft Form. We are creating a new form now. So in essence i am struggling to get the “original” data pulled in with the “new” data; while still having all the features discussed above. For some reason it gets angry when I try to self reference the final table and it won’t let me pull in the comments during the merge.

So what I have in my queries now is “New Data” – Query to table (pulls from the new forms sheet to this reporting sheet), “Original Data” – Connection Only (Copy and Paste of values History from the previous forms sheet, has many custom columns that I deleted in PQ), Append1 (appended New and Original), Append2 – connection only of Append1 with the custom columns. ***This is my failure point, it won’t let me pull the custom columns during this final merge***

Where am I going wrong?

Hi, Matt, just to thank you for this article. I made 3 self-referencing tables and all worked perferctly! Very routine work now is over. My tables are like a living organism now.

Thanks and all the best!

Hello Matt,

Your explanation is really good. It worked for me. Tons of thanks.

It’s just I have added another step in manually entry. I prioritize by highlighting cells(Fill colour) in a specific column wherein I enter manual data. Whenever source data gets updated manual data sticks to its position wrt to ID but color gets left behind, probably at same Row number it was at earlier.

How to manage this issue?

no idea, sorry. It seems colours stick to the cell, not the data.

I have spent at least 20-30 hours trying to figure this out on my own. This was perfect and is going to save me SOOOOO much time and effort. THANK YOU!

Hi Matt,

My source data is from an Excel file on the web that I load into Power Query, cleanse the data and load to an Excel sheet. Is this solution still possible if my data is from a query already? I’m not sure how I would do this based on the above.

Thanks

The source is not that important. What is important is that the source has a unique identifier for each row of data. If this does not exist, the results will be inconsistent (ie potentially wrong). If you don’t have a unique ID, you can possibly create one by concatenating the date, descr and possibly the amount.

I found this after struggling to figure out this exact problem. Funny how little information I was able to find before coming across this post.

Adding an index column has now helped me with two unique issues.

I just wanted to pop in and thank you for helping me look like a superstar at work!

I’m glad it helped.

Works, but not with the dynamic “threaded comments” feature, those comments still get out of sync.

Great content, thank you.

I have one concern though. Some time the bank is resending the same ID record with an updated content. (say, by international transactions they send the record first with estimated charge aka. deposit and on the real charging day – maybe T+2 – they send the actual values with the same reference No. [ID]) so the requisit “You don’t accidentally load duplicate data – so don’t do that!” can not be mainaned.

Can you share your idea, how to solve this issue?

Thank you,

Lajos

I believe you have two options here.

1. you want to keep both records of the duplicate data – then use the concatenation technique mentioned above, combining [ID] and [Transaction Date] to create your unique identifier.

2. you only want to keep the final record – then I suggest leveraging Table.Buffer() to force a sort descending on the data

https://exceleratorbi.com.au/remove-duplicates-keep-last-record-power-query/

This is SUCH a wonderful solution. Had to do something for a client and this made it so easy to produce the desired outcome. Especially the tip to create a concatenated field for the join. Thank you for sharing!

Matt, this is brilliant, I spent a fair amount of time trying to come up with a similar workflow. THANKS!!!!

@Elisabeth I feel your pain. I would recommend you create your index/key column as your first step in the original query, close and load to Excel, add your user defined columns, take that table back into PQ, save as connection and merge to the original query and add a step to remove duplicates to avoid recursion (the doubling you describe) before adding any subsequent transformations. If a refresh creates new columns delete them and repeat as necessary until your data is maintained where it should be.

@Elisabeth I feel your pain. I would recommend you create your index/key column as your first step in the original query, close and load to Excel, add your user defined columns, take that table back into PQ, save as connection and merge to the original query and add a step to remove duplicates from the index/key column to avoid recursion (the doubling you describe) before adding any subsequent transformations. If a refresh creates new columns delete them and repeat as necessary until your data is maintained where it should be.

Hi,

when I do this, it doubles the amount of data. Any suggestions?

Hi Matt… thank you for this great training.

I used the steps to merge the two tables, however, I have some formula in a couple of columns of the second table that are being removed by refreshing the query. Wondering if there’s any solution to add them to the expanded table. In my case, I need to evaluate four columns data from the second table (not the data source), i.e. if (sum(col 1, col 2, col 3, col 4) <= 4, then "low", …)

I tried to follow the tutorial first by mimicking the examples and it worked great!

But when I apply it to my own table it doesn’t work and duplicates the report entries every time I hit refresh.

when you say “duplicate report entries” do you mean the rows are duplicated? If so, my assumption is you do not have a unique primary key on the source data and the left outer join is matching 1 to many

“If you get a new column called Comment2, just delete it.”

There is more to this! With the current version of Excel, you WILL get a Comment2 column because of how smart tables work. BUT in deleting it, you’re not deleting a “duplicate” column, you’re actually deleting the ORIGINAL comment column you added at the beginning. You can see this if you format the original comment column before continuing on with the steps. When you load the query after merging with the new self referencing query, it loads WITHIN the area set by the original table, thus pushing the original column out to the right and adding a 2 to the end of the ORIGINAL column’s name.

This is important, especially if you have multiple comment columns within a query table. It’s important to delete the original comment columns, remove the “2” from the end of the header name, and save. This will ensure the comments stay aligned, no matter how many comment columns you have within the table.

Essentially, you end up using a connection only power query to set up a column with free-form that can be entered directly onto the excel spreadsheet. Like a custom column!

This is VERY important, should be added to original article!

” This is important, especially if you have multiple comment columns within a query table. It’s important to delete the original comment columns, remove the “2” from the end of the header name, and save. This will ensure the comments stay aligned, no matter how many comment columns you have within the table ”

I don’t get this, what needs to be done when you get Comments2 column?

In Excel, in the green table (results from the query), click in the duplicate column (called Comments2) and delete the column completely from the table.

Hi Matt,

I am continuing to get duplicates as well. I created a source query from my master query and then pulled out a connection only table from the source query. It then merged my soure query and connection table together, and I am getting the duplicate columns for the 4 “comment” sections I added. I tried to delete out the 4 original ones, but whenever I refresh, still adds. I don’t understand what you mean by “unique value” because it is pulling from my other table to add those 4 columns. Any insight is greatly appreciated!

THANK YOU…. Big thank you to everyone. This is amazing and it saved my work!

At the moment I am copying my source to my table. I am wandering what will happen if I take my source from Sharepoint. Would it make a difference?

As long as the unique ID is in the file, you should be able to move it. Changing source can be challenging. I normally recommend reconnect from scratch to the new source until you can see the basic table, then cut and paste the advanced code that does that with the original query. Just be careful and backup first

Hello G, I’ve got a pb with what you wrote :

“adding a 2 to the end of the ORIGINAL column’s name” and the next line “It’s important to delete the original comment columns”, but “remove the “2” from the end…”

So we have to delete the original colomn, which contains the 2 at the end, and after delete the 2 at the end of the previous column we delete ? I don’t get it, can you tell me which column have to be kept : the one with the 2 added (and rename it afterward) or the one without the 2 ?

Thanks in advance

Is there a way to keep self-referencing table columns from getting out of order upon power query refresh? My power query source table has about 10 columns. In my new table, I add multiple columns that self-reference the power query table columns. Ideally, these added columns would remain next to the column they are referencing. However, when I perform a power query refresh, the columns get out of order.

Thank you for this Matt, this was an absolute lifesaver for one of the reports I am producing.

When I did this and added my Comments tab, then connected PQTable2 to itself, it ended up giving me two note columns?

Delete the second one from the table.

Issue I’m having is if I put numbers in the cells everything works as above but if I enter text it disappears

Hello! I have the same issue. Can anyone help with this? Many thanks!

Hi Mai,

I am unclear on what your challenge is.

I have just tested, and everything is working as expected.

Have you confirmed your technique is working with a simple test data set like Matt used above?

The only way to get this right is to include the comments fields on the source data. Once done, you can refresh as many times you want and also add multiple comments with no issues.

Thanks for the tutorial. Between this and several others, I’m pretty sure I understand how to do this for a practical use verses practice.

The part I’m having trouble with is if a line in the source table is deleted. When that happens, the matching data in the Query table is also deleted, but any data in the comment column isn’t, causing the data in the comment column to be misaligned in relation to all the other data.

Is there any solution to this? There was one youtube video that made it work, but I couldn’t even get a proof of concept of that to work for me.

Interesting. I have never considered that. My guess is you could filter missing lines in the source table before the join, or after the join.

Yes, indeed there were duplicates values to begin with. It’s all good now – thanks a lot and happy Friday.

Hi Matt, thanks for the tutorial.

It all seems to work, but when I refresh my data then the number of lines increase in number, and there are duplicate values of the ID column.

I start with 44,000 rows, which I get through an SQL query. When I refresh the data, that number turns into 98,000, and when I hit refresh immediately afterwards, it turns into around 918,000 rows. After that, it’s in the millions.

Any idea why? Thanks in advance.

Yes. You must have duplicates in your ID column. This only works if you have an ID column with no duplicates

I have successfully completed this process again just now. There is a slightly different behaviour. After joining the comments table back on itself, PQ returned a second column into Excel call Comment2. I simply deleted this table and the process worked as otherwise described.

when I hit refresh the Comment2 column just reappears. I have hidden the Comment2 column to get around it, but it is still there.

This is great, but it completely wipes out the “table 2” sorting and formatting each time I refresh. Is there a way to preserve my table layout?

Add an Index prior to the merge and then sort on the index after the merge – then delete the index column.

This doesn’t work anymore. Power query creates 2 of the same column even if you remove the “.1” in the code.

Hi Matt,

Thanks for this article!

“The example shown in this post will work just fine as long as 2 conditions are maintained.

1. You have a unique ID for each record

2. You don’t accidentally load duplicate data – so don’t do that!”

By loading duplicate data you mean that it will not work because it will have the same ID ? So everytime I export my CSV, I have to make sure it inclues only new data ?

Thanks

Daniel

I’m not really sure what I was thinking when I wrote that. Every row must have a unique ID. By definition, this means no duplicate data. The Join is on the ID column, so if it is not unique, it won’t work.

Hello Matt,

this tutorial has aged very well! Thanks for the great explanation.

But is still have a problem i can not solve …

I am using an ID by concatenating 3 different columns to have a unique Key.

Everything look great until i add a new row at the end of the source table and refresh the data, two different behaviours happen. (I am not chaning anything else in the source data, no sorting etc)

a) the refresh works properly

b) depending on the actual sorting of the output table the comments are to referenced incorrectly :/

Any clue why this happens?

I’ve never tried it. My only thought is that the first step at the source does not have the key, and I assume the second step creates the key. How about drop the key idea and do the join on all 3 columns? That’s what I would try

Okay did the whole worksheet from scratch and removed any unesseary M-Code like “Change Type” …

Works like a charm right know.

Can not tell what was the problem in the first place …

PS: Is there a way to keep the coloring of certain rows referenced, too. For example i want to mark certain rows in “blue” w/o using Conditional Formatting. But the row don´t stick to the color when chaning the sort order of th ouput or adding data to the source.

Cheers!

I can’t think of a way to colour the rows.

“To prove this, I completed the same sort test as before. First I sort the source table, then refresh the Power Query table – you can see the results below. Note how this time the comments stick with the row in the table – sweet!”

After you sort the source table and refresh the power query table, the two tables aren’t identical: the power query table is “sorted” differently. How can you solve that problem?

If the problem is the sorting, why sort? I’m not saying you “need to” sort, I was just demonstrating that the comments stick. If you don’t want the order of the table to change, don’t sort it.

Hi!

This was a very clear explanation – thank you!

I’m trying to solve a similar problem with a caveat. Using your example I’ll try to explain it. What if the dates column was being populated from another data table – so when I refresh the base table, new dates are added out of order? In this example, my comments don’t stay linked to the right id’s – is there a work around? Will this strategy only work if new data is appended at the end?

Thanks!

So you mean data is coming from 2 separate tables? I can’t see how this will work. I suggest editing the source files with the comments

Hi there : )

thank you for the great tutorial!

You mentioned an “audit query that checks for duplicates and warns you if this occurs”.

Have you ever written a blog post about this?

I would like Excel 2016 to notify the user or if possible reject the tables if duplicates occur.

Unfortunately it’s not guaranteed that my ID’s remain unique.

I have included it in our paid training course on Power Query at http://skillwave.training, but I have never done a blog per se. This is what I would suggest you do.

1. create a staging query to the dimension table, set it so it doesn’t load.

2. create a new query that references 1, select the key column and set it to remove duplicates. If the are duplicates then it will delete one of them (the first one). You won’t have control, but at least it will work.

3. create another new query that references 1, use GroupBy on the key column, and count rows. Filter to keep only rows that have a count of 2 or more. Load this to Excel and monitor it for duplicates.

When doing this in Power BI, you can auto send an email. You could do this in Excel, but you would have to use some VBA.

Hope that helps.

Thank you! It was very helpful.

Please help! I’m going crazy here. I have 5 tables I’m brining into Excel then merging together via PowerQuery with a full outer join; I’m attempting to use this as my source data. Following the steps outlined above I can now get my comments to reliably stay linked with the table when working through it (resorting, etc.).

My problem becomes that when I refresh the underlying data my comments move around and are no longer linked. I’ve tried adding an index column to the PowerQuery Source (i.e. the 5 merged tables) then repeating the steps. Unfortunately I don’t have a single unique value across all 5 data sets as somethings in data set 2-5 might now have a matched value in data set 1, and thus are mapped to a ‘blank’ entry for dataset 1. This is important to me as I’m attempting to audit and identify items that exist in some number of these data sets and may not (today) exist in the first data set, but should.

Update: I think my issue is that the original comments columns are not part of the Power Query table I’m editing them on, and no matter what I edit I cannot get the newly merged comments columns to ‘overwrite’ the existing ones. so I end up with something like

Original PQ Source Table + Unlinked Comments Columns (e.g. Audit.Audit Recommendation) + merged indexed comments columns (e.g. Audit.Audit Recommendation 2).

I’ve reviewed the code in the Advanced editor and the comments columns are named the same as the unlinked comments columns, but no matter what I do they still appear with a number after them in Excel proper.

You must have a primary key in the source to make this work, you can’t add one in PQ. One way to create one from the source is to add a new merged column containing multiple columns that together make the record unique. Eg, you could Add Column, Merge Column and use Date, time, amount, description to create a unique key (assuming you don’t have 2 transactions the same across these 4 columns.

I tried adding a custom column via PQ with a formula adding the foreign key column from each data set to create a unique primary key, but I ran into the same problem.

For reference the data sets I’m working with are asset listings off various systems:

| ds1 | ds2 | ds3 | ds4 | ds5 |

| asset1 | asset1 | asset1 | asset1 | asset1 |

| asset2 | asset2 | | | |

| | asset3 | | | |

| | | | asset4 | |

I’m struggling as my data sets don’t have a native primary key outside of the name I’m using to perform the full joins to begin with. In typing this out I’m thinking my best bet might be to go back to the 5 tables I’m merging together and creating and somehow merging the asset name into a single column from each dataset to create a primary key in a single column.

P.S. I apologize if my thoughts are a bit scatter brained it’s almost 1:30 am here and I’ve been struggling with this same problem for the past week and have put well over 40 hours into it at this point. On the plus side I went from very little PQ knowledge to a workable understanding.

This is such a brilliant idea! thank you!

Is there a way to do this multiple times in the same workbook? So that I can have say a new table for each month with only the comments for each month changing?

Please help !! I have a set of data that I am bringing in a dynamic sheet and referencing the application number as my index and followed your steps many time and when I add a column or delete a column based on the refreshed data the notes move around ?

Also some of the time when I do this I also get the duplicated columns with the date lastcontacted2 for example it does not show in my query results just in the table.

Do you have a unique id in the source data that you are using for the join? This is mandatory, and cannot be added in power query

Hi Matt,

This is brilliant thank you so much/. The issue I am having is what if I want to type over something I have already typed. When I do this the new typed data disappears on refresh and even though the query will update an error occurs.

Thanks!

Laura

Wow. This article is nearly 5 years old – I never thought of that. Did you try deleting the text and refreshing, then adding new text? Not sure if that will work. Another option (more robust, but more hassle) is to maintain a second table with the unique iD and the notes, then join them in the query. This is actually rock solid, but you have to manage the second table. I guess you could streamline it with some VBA. That may be another post one day.

Thanks Matt,

If I used your second option will the unique identifiers have to be manually maintained. We are using this to follow up quotes so I have a unique identifier. If I have the quotes numbers maintained as a query then I am going to have to make the second table a self referencing query so I will end up with the same problem?

Laura

I found a solution to the problem here

https://social.technet.microsoft.com/Forums/en-US/e1c18dfc-fff2-4621-96a6-bd1a7a3903f4/how-can-i-add-a-new-column-to-the-workbook-so-data-can-be-entered-in-addition-to-the-results-loaded?forum=powerquery

The only real differences to your solution is that I delete the extra data in the connection query and I also specify what sort of data is in the connection query. I suspect it is the second part that has mad the difference but I don’t know why….

Thank you Matt for this awesome tutorial! It definitely opened my eyes for something I didn’t think it was possible with PQ. I had the same issue as Laura though and adding these two steps to the process resolved any issues and I was able to sync the columns both ways successfully. Thank you Laura for your addition, it definitely helped me a lot!

Fantastic! This has been a life saver for me – I work for a retailer and sometimes we need to pull a list of returned goods that needs investigating from our SQL-server, for them to be investigated and manually assigned a category in Excel. The problem was, that when I would refresh the data set to pull new orders that needed investigation, the manual assignment got misaligned and put onto the wrong order. Using this, I was able to keep it correctly aligned when pulling new data from our server. Thank you so much.

Do you know if this is possible in Power BI during source import queries?

I’m not sure what you are asking. This article is essentially the user updating the source manually. If you are looking for write back, you should look at https://www.acterys.com/

This has been a huge help! Thank you! Not sure if this thread is still active, but here goes nothing. I’m working in Excel 2016.

When removing the .1 after you complete the merge and expand the columns, I get an Expression.Error: The field ‘____’ already exists in the record and it won’t run the query. When watching a video that was posted in the comments, after the .1 was removed the columns just popped together.

Any advice? Appreciate the help and the article!

The results do vary from time to time as the versions change. I don’t have the magic answer – you will just have to experiment and see what you can do.

Thanks Matt. Appreciate the response!

Did you ever figure out a fix for this Derick? Followed the above steps and always get the same error when removing the .1. . Am using Excel v2306

Thank you, Matt! This really helps?

Do you know how I can insert a row manually in the source table so that the comment shifts as well?

This solution has a source file and a comments table. I don’t see how you could add a row in the comments table as the comments table is just a copy of the source file. If you wanted to add new records, I would probably take a different approach and create a second table of manual records and append that to the source file data. It would mean you would have to uniquely ID each new row of data from any other in the source.

This is Great! Everything seems to be working as it should except for when I input formulas into the cells of the new table. When just entering data and I hit refresh, everything stays. However, when I input a formula into one of the cells and hit refresh, the formula disappears in the formula box and it is replaced with the result of the formula. Any thoughts as to why this is happening?

Yes. You can’t use formulas. The data in the table gets loaded into PowerQuery and then reloaded back into the table. During that process, any formulas will be converted to text. There is no way around this for this process

I solved this by creating a Macro, that refresh all my data and afterwords creating the formula and copy it into all cells. (Formula must be the same for all rows). That way the user has also a button to refresh data without going to Data -> Refresh all

See below my copy.

Sub CopyFormula()

‘

‘ CopyFormula Macro

‘

ActiveWorkbook.RefreshAll

Application.CutCopyMode = False

Range(“I2”).Select

ActiveCell.Formula2R1C1 = “=ExtraUren([@[Protime per week]],[@Verschil],[@[Volledige naam]],[@GOEDKEUREN])”

Selection.AutoFill Destination:=Range(“Overzicht_reg[Extra Uren]”)

Range(“Overzicht_reg[Extra Uren]”).Select

End Sub

My function is also custom made

This doesn’t work if you have large data (long refresh time), then you need to refresh data separably from the formula copy.

Unnecessary to do this, explicitly ignore the column containing the excel formula in your PQ code – don’t return a table which contains it.

Hi Matt,

Thanks for publishing this post! It is exactly what I needed. However, upon completing it I noticed that my data is self duplicating random rows upon each refresh. I have not seen anyone else have this issue in the comments below. Here is my situation:

1) PQ from Exchange Server to pull purchase orders

1.1) in edit Query I perform lots of table transformations to filter out columns/data that is not needed in my report.

1.2) Close and Load – creates table “Mail”

2) Added 5 columns to this table

3) Create “Input” query with added columns and close and load to connect only.

4) Merge queries to same “Mail” table (using auto generated “ID” column as the key from exchange data) with Left.outerjoin

5) delete duplicated columns PQ adds after merge (I.e comments1, etc)

I have the report set to auto refresh every minute since we get hundreds of orders throughout the day. First, I only used one table in excel for output/input and i had this duplication. Then I tried using two tables (PQ from Exchange and transform data into table 1 then PQ that to Table 2. Table 2 is then made into output/input using your method above with less table transformations since it’s referencing table 1) but i still get random row duplications that exponentially duplicate with each auto refresh.

Any advice on how to prevent this from randomly duplicating rows? Thanks in advance for your response!

You must have a primary key to join the tables. If not, duplicates will occur.

Thanks for the response! In your example in your post, the primary key is the unique ID column? Or is it something else I need to define?

It can be anything, as long as it is unique and comes from the source file. It could be a concatenated column combining date, description and amount if necessary.

Hi Matt,

can you explain why this works? When you try to use the result of an Excel formula inside the formula, you’ll get an error. Somehow this solution feels like it would also create an error. Does it depend on the order in which the queries are processed? Is there any risk that future versions of Power Query will break my table-with-comments?

Can I explain why?, not really; i just know it works. Effectively there are 2 copies of the table, to original and the one with the comments, and it sorts itself out. Power Query is not Excel. Excel formulas continue to iterate until there is an end point. Power Query doesn’t – it just does one iteration then stops once the table loads. It seems to always work. Yes, there is a risk it will stop working. It has already changed a few times, but it still works.

Matt,

This has to be one of the most glorious solutions I’ve come across. I’m a heavy user of Visio in my consulting role and needed to be able to make use of the data driven Visio cross functional flowcharts but needed to use the Excel table the Visio interacts with as a dynamic data source. As you may be aware Visio is notoriously finicky about any changes to the underlying table. For the project I’m on I needed to not only derive additional columns from the Visio data but also to extend the dataset out in order to build some semi-dynamic documentation. I’d been losing my mind trying to carefully extend the base spreadsheet only to keep having the link between the Visio and the Excel break.

Finding this self referencing method has completely solved the issue because I can simply leave the original Visio Excel table alone. I’ve built a second spreadsheet that uses the original table as a source but also extends on that table for the additional fields I need using the Visio Object ID as the primary key. I’ve managed to get a combination of calculated fields, mixed with free text fields (as per your example) and it’s completely saved the day!

Thank you so much for sharing this brilliant solution.

Bravo!

Stu

ITSM/SIAM Consultant

Thank you Matt for this post; Leila G pointed me to your site which I greatly appreciate (I took her PQ course took to find such nuggets – her course is great btw!!). I only found one other YouTube video referring to this topic (Doug H from exceltraining101.com). There are a lot of assumptions here (it should work, you should delete) which may not be straight forward! Have you tested the position of your “comment” column in reference to the original query? I would like my “comment” column to actually be almost at the beginning (2nd column to the left) of my original table (inserting the “comment” in column B); this seems to throw a curve ball to the process. I would love to hear from you if you have tested more scenarios on how the “comment” column position affects performance. My “source” table is a query from an external Excel file. Many thanks in advance! Yves

Sorry, no I haven’t tested other scenarios.

Matt, I have tested a few options:

1) I figured it is possible to add multiple “comment” columns (I needed to add 5 comment columns!)

2) when adding the “comment” columns, I created them first at the end of the table (far right); when I attempted to insert them in the middle or before the first column, the “comments.1″,”comments.2” replicas would pop up on every refresh. This seems to be a key step.

3) I created the simple self-referencing query as you suggest.

4) I merged my original query with the self-referencing using several data columns for my merge – I had no unique ID).

5) Upon the very first refresh, I deleted the extra “comments.1” to the right of my table.

6) I went back in to query editor and re-ordered the columns as I needed (I wanted the comments columns at the beginning).

The refresh now holds in place!

Thanks again for the great pointers Matt!

Hi Matt, trying to get this working… at the very start when you created table 2 from the source data, was that done just in the query or did it get loaded to the power pivot in the background? How did the Comments column get created in the first place? I can’t seem to make a blank column in power query, so have to make it in power pivot. but then I end up with a Comments.1 column in power pivot, even though in the excel page I have deleted it.

When I hit refresh the comments data I have put in just disappears.

You connect to the source, edit the query and “load to table in Excel”. This has nothing to do with Power Pivot. The comments table is then added by simply clicking in the cell next to the table as shown in the first image in the article. No where do I create a blank column in Power Query, nor do I use Power Pivot.

Funny thing, I just cooked up a similar solution a couple of hours ago. Similar scenario, user wanted to add new comment data columns to data imported using PowerQuery.

.

We have a unique row ID for the join.

.

I created a “comment” table that consists of the ID column and comment column.

.

Use PQ to import the source data, close and load to Excel/PowerBI

Use PQ to import the Comments table, close create connection only

Open the source data query, Merge it to comments query using a Left Outer join

.

Your self reference has 2 copies of the full table. My way only has the smaller number of rows with comments, but requires a separate comment input table. I’m not sure which is better. Your way may be a little “neater”.

(Oops forgot to include:) here is a link to description of my variation, which has a link to an example workbook

https://answers.microsoft.com/en-us/msoffice/forum/all/ms-power-query-importing-data/ab0e292d-c47a-4a0a-8455-115dadeac915

Hi Matt, this is awesome. Is there a way to connect the query table to the source table? What I mean is, my source table is really long and I want the users to make any changes they have on the smaller query table. Is there a way to relay those changes back to the source using a variation of the above?

This solution creates a “copy” of the source with an additional column that only exists in this new copy. It can only display the new column in this “copy”. It can’t “write it back” to the source. I am not sure if I understood correctly, or not.

Thank you Matt

In my situation, the source table is a live table managed by one stakeholder. My second table using your above self-referencing technique has additional columns that another stakeholder updates. Ultimately, they both have certain columns that (at this moment) can be updated by either parties but with the above structure cannot be updated on different tables. I was looking to understand if there was a variation of the above technique to make that possible. I hope that clarifies things.

The issue with a “copy” is, (in my beginner level experience), is that the source is a live document and cannot be maintained as such if I make a “copy” from my understanding.

Thanks again

I recommend looking for a different solution. Options include Excel Online, SharePoint and Power Apps. I’m sure there are other options too.

Hi this is just awesome. thanks very much for sharing. the addional columns i added included some formula fields i.e. Table Column C = Table Column A + Table Column B. This formula disappeared on refresh and only the data remained. So any newly added table columns will loose the formulas that were input. I set these formulas for the columns programatically and it works great. thanks.

Hi Matt,

this really is a fantastic solution ! Unfortunately when I tried to share the excel file with with number of colleagues via Sharepoint or OneDrive, working simultaneously in the file seemed to cause issues. as long as we only entered comments we could save/refresh the file. but as soon as we refreshed the query before saving we got an error message upon saving saying that the “file wasn’t uploaded because we cannot merge changes made by xxxxxx” and then the requirement to either “save a copy” or “discard my changes”. do you have any idea ?

Thank you so much !

I realise this doesn’t help, but this is a OneDrive issue. I actually hate OneDrive with a passion. Give me Dropbox any day! I can’t suggest anything other than to save first. One thing you could do is to make it a VBA macro workbook and add a button to save and refresh. This would prompt people to click, ensuring the required steps are executed in the required order.

Hi Matt, thanks for looking into my question, but I may have not been clear. We can simultaneously enter comments in the power query table and can save those comments but as soon as you run the query (before or after the saving) to link the comments to the other data in the table you can’t save anymore without getting the error message. so it really seems the query refresh action (which works) that cannot be saved. I could write a macro that ensures the required steps as executed in the right order, but I’m not sure it will work as it really seems the end result of running the query that cannot be shared. As long as you work alone in the file it works perfectly. I have not enough Power Query knowledge to understand what triggers this and if there is anything I can do about it. Thanks again !

Hi Matt, I did some further testing . if multiple users are co-authoring the file and user 1 has not saved his comments yet before user 2 saves his comments (and thus also refreshed his sheet with potential saved data from other users) and refreshes the query and saves the end result, the unsaved comments entered by user 1 are wiped out when he clicks the save button. I guess the save action refreshes his view with the end result of the query by user 2, instead of saving his own comments. Any thoughts for a potential solution ? Thanks a lot !

I can’t think of a simple solution to this other than to force a single person to edit at any one time, maybe with check in/check out the documents or turn off the collaboration features.

Hi. I group, index, filter a top 10, then sort by my variable gross value$. Using the old method it used to keep my list sorted correctly, now I cannot see any pattern and it appears random. Any ideas?

Sorry, I don’t understand what you are saying. Have you tried sorting in PQ before loading?

Hi Matt,

Followed your tutorial but I’m getting the duplicate columns issue. Tried deleting the second one that comes up but it keeps reappearing and the data that I’ve put in the first one shifts over. Does this still work or is there an alternative?

Excel Version: 365 MSO 16.0.12730.20144 32 Bit

Cheers,

George

It definitely works – I did it just the other day when recording a training video for Power Query Academy at Skillwave (in case you are interested in more comprehensive training) https://www.skillwave.training/courses/power-query-advanced/

I did notice a small change that could be the issue. After you merge the comments table, Power Query renames the new merged column that you extract and calls it comments.1 You need to edit the rename step so it keeps the original name without the .1

I tried it and also get the duplicate column, in which I delete after refresh reappears. I am wondering if there is a trick for this now. Office 365 ver 2004 (build 12730.20352)

It work actually, but the first time it rewrites all the comments in the second columns, yaiks.

Hi Matt

This is avery useful article, thank you for that.

I have folowed your implementaion staps and the only difference on my side is that afer merging the queries and loading table 2 back to Excel I end up with 2 “comments” columns as opposed to one as in your example. Is this expected in the latest version of Excel?

Thanks

Claudio Lopes

The behaviour has definitely changed a few times over the years. Have you tried deleting the new second column and then refreshing again? That certainly used to work. Please let me know. Also which build version of Excel you have

I have this problem too. Deleting the column and refreshing does not solve the issue.

OK, so it sounds like it doesn’t work anymore.

Actually, I just tried it and it worked for me. I am using Excel O365 Version 2003 Build 12624.20410

Thank you for this nifty little trick!! I just deleted the second row and refreshed.(after an hour of banging my head). It works great.

Hi Matt

Great article, thank you. Quick question: If the text in the comments box is a formula, is it possible to retain this formula on refresh?

No, it is not possible

Matt,

Can’t thank you enough for this clear explanation.

I went through this solution on many discussion forums / sites – but was finally able to grasp only after reading the way you have explained!

A major roadblock is cleared with this. I wonder why don’t Microsoft Technical Team make it a native functionality in their Excel programming. This is something that is obviously needed by many people.

Thanking you a million times.

Best Regards

I’m glad it was helpful

This is a great tip! I wonder though– is it possible to also have dynamic columns that also preserve data even if renamed?

This is what I have now, using Role and Group tables to define a RoleGroup matrix:

let

Role = Excel.CurrentWorkbook(){[Name=”Role”]}[Content],

Group = Excel.CurrentWorkbook(){[Name=”Group”]}[Content],

RoleGroup = Excel.CurrentWorkbook(){[Name=”RoleGroup”]}[Content],

#”Merged Queries” = Table.NestedJoin(Role,{“ID”},RoleGroup,{“ID”},”Removed Columns”,JoinKind.LeftOuter),

#”Expanded Removed Columns” = Table.ExpandTableColumn(#”Merged Queries”, “Removed Columns”, List.Skip(Table.ColumnNames(RoleGroup),2)),

#”Removed Other Columns” = Table.SelectColumns(#”Expanded Removed Columns”,{“ID”, “Client Internal Role”}&Group[Group LongName], MissingField.UseNull)

in

#”Removed Other Columns”

I can add, remove, and rename rows (Roles here) while preserving anything in that row in the RoleGroup matrix (provided the ID doesn’t change), but I can only add and remove columns. If I rename a column (Groups here), the column is renamed (or more accurately, replaced), but the data in it is lost.

Can you assist? I think the solution would be generally applicable to a lot of use cases. Thanks!

I can’t think of a way that dynamic columns could work. Maybe you can unpivot the columns? I’m not sure if that will do what you need.

Yes, the blue table is the source data created by converting the data into an Excel table. The second (green) table is the one generated by PQ. To move the green table, right click on the query in the query pane on e right, select “load to” and then specify the location.

Thanks for this tip. Do you know a simple way to change the “comments” column name without losing your data? When I change the column name, it breaks the connection and then the self-referencing query. Even if I manually change the connection (using advanced editor) to the new column name (let’s say “Changed name”), when the query is refreshed, all of the data is lost.

The general context for the question is that I am aggregating data from multiple bank accounts for analysis. This data set may change as my analysis progresses. Each transaction is given a unique ID in the original account transaction table. I pull each account table into a separate PQ and then append them all together in a master list (called pq_AllTrans). I then want to be able to analyze the data as I see fit (including adding and removing analysis columns, changing their names, etc.). It is critical that my analysis survive any changes to the underlying data set.

It is hard to say. My guess is that it will work as long as you change the column name in the original query(ies) before you load the table. Once you have loaded the table in Excel you won’t be able to change it prior to reloading it.

Hi!

I think I will be able to use this method as a solution to my problem. Although, I don’t understand this part of your method: “Note: Prior to completing the self referencing steps below, I used Power Query to create the second table from the source table. This was my starting point.” Could you tell how did you do this? Is the “second table” you are referring here the green table named 2. Power Query Table? How did you get the two tables side by side in the same sheet?

Thanks in advance.

Hi Matt,

thanks for this smart solution.

I’m identifying one problem with office 365.

When I sort the table or enter a new key in the middle of the source table I need to refresh the query two times before the new key is visible or the sorting is correct.

Do you have a hint why this is happening?

Sorry, I don’t have any tips. My best guess is the order in which the queries are executed is back to front, hence why the second refresh fixes it. Currently there is no way to control which queries are executed in which order natively in PQ. If it is a big issue, I guess you could write some VBA to refresh one at a time, in the correct order.

Hi, I tried this trick but as needed to have a kind of back log tracking of comments, but during the self-joining I have > 6 000 rows the file is inflating to gigantic amount Mb of memory. I think the recursive step is probably killing the process at each steps creating a table, nobody in the comments reported this kind of problem, it’s strange. May be I am doing something wrong. Tried also with duplicating the query and not loading from the table.

Interesting – I haven’t heard of that before either (but I also haven’t tried it with 6000 records). This would be a question for the superwoman of Power Query (Imke Feldman) from https://www.thebiccountant.com/. I will ask her if she can comment. Maybe it is a Table.Buffer fix? I’m not sure

Hi there,

6k rows would only create a problem for my experience, if there are complicated transformations before.

Buffering could solve the problem. So please “wrap” the first table expression (“PreviousStepName”) in a Table.Buffer like so:

Table.NestedJoin(Table.Buffer(#”PreviousStepName”), {“ID”}, ImportData, {“ID”}, “ImportData”, JoinKind.LeftOuter)

Missing keys could also slow down a join (although it shouldn’t be a problem with 6k rows). But anyhow – removing Duplicates would solve that problem like so:

Table.NestedJoin(

Table.Buffer(#”PreviousStepName”), {“ID”},

Table.Distinct(ImportData, {“ID”}), {“ID”},

“ImportData”, JoinKind.LeftOuter)

What about append tables??? I have 5 sheets that are merged it 1 query. When i use your method it works. But when additional data is added in some sheet(s) it doesn’t works anymore.

So how to achieve this:

i have 5 sheets with data that will constantly add new data. I want 1 query that will append this into one table but with function that i can add comments.

Well you need a unique key on each row to make it work. If you inserted new rows, as long as the keys don’t change and the new rows get new keys, I don’t see why it wouldn’t just work.

I desperately need this to work for me – but I don’t get the same results??

I’ve created a simple table in Test.xls, Then loaded in Test 2.xls using Power query.

Added the additional column, loaded from table, merged with itself etc.

But see the behaviour in this video I posted on YouTbe.

https://youtu.be/gMOR5dK90Zo

I can’t see what is happening under the hood. If you would like to post the sample files (both the source and the PQ workbooks) I will take a look

Thank you for sharing. I think I encountered the same issue as others here : if i cancel the new column, the columns are independent again and new sorting of datas leads to a mess. So I kept (and hired) the added column and it seems to work 😉

But did you really have this idea rolling around in your head for a year prior, Matt? You totally stole the technique AND the name from me. Sorry, but this has been bugging me for years lol

social.technet.microsoft.com/Forums/systemcenter/en-US/07065e1b-f1c8-43c5-82b5-d623990613c5/query-table-column-width-resizing

Exactly what I was looking for! Thank you for the post.

Hi, Matt! It’s a really powerful trick, thanks a lot! I have an another such an issue that I can’t handle. I need to change an already loaded value (let’s say date in your table 1) and that new value should be fixed. May be you could give me an idea to solve it..

Sorry, I don’t understand the scenario

Hi, Matt, scenario is simple. I have a raw data from a DB. And I know that this field in this row is incorrect. I haven’t an opportunity to correct DB, but PBI model should be correct. I need a way to make changes on fly either it’s a fact-table or a dimension-table. Now I make an algorithm with row substitution from a table but it’s a little bit clumsy.

I guess it depends on the issues, but It sounds like you could create a substitute table to map the errors and convert on load.

Many thanks for reply, Matt! I’ve worked out such approach, but it would be great (in my dreams) to make it so easy as in your self-referencing solution (Just make changes in output table) 🙂

Matt,

In my sales data among Customer ShipTo names there is some redundancy, such as “ABC Cleaners” and “ABC Cleaners Inc”. I don’t have access to the primary source to remove duplicates there. Each month I get an updated file. I want to keep the original Customer ShipTo names, so I use your excellent method above to build a crosswalk/lookup table then build a relationship between [CustShipToName] (original w duplicates) in the original table to [CustShipToMergedName] in my lookup table. Works fine in Excel PowerQuery as you show here.

Can this be done in Power Bi desktop? Imke posted that Power BI makes self-referencing tables easy, but what about your method of adding an editable column to a self-referenced table: can this be done in Power BI Desktop? I’m relatively new to Power BI, and haven’t found a way to easily edit individual cell values (without writing a new function step in PowerQuery for each edit) in the manner needed to update values in my crosswalk table. If I want to run my report again, this time merging sales of XYZ Co and XYZ Assoc because new information tells me they are same company, how best do I do this in Power Bi using your method.

My workaround is to use Excel to create the lookup table, write the results to separate CSV file, then add it to Power BI. Can you think of a better mothod?

Thanks,

Dan

Good question Dan. There is nowhere in Power BI that has a “cell” reference where you can take an extract from a query and then manually add data into a new column. So you can do it the way you are doing it (which is not bad). Another approach I could think of (not quite as good, but not bad) is.

1. Write an “enter data” query to create your substitute table and load it to power bi

2. Write an audit query that returns the new values that you need to match that currently don’t have a substitute. Return those values to an audit table in Power BI.

3. When you refresh, check this audit table. If it contains any values, copy the values to the clipboard

4. Go back into the enter data query, paste the new records to the bottom of the table and manually add the substitutes

5. refresh and make sure the audit table is empty. You could even put a warning flag on your report that tells you when there are records in the audit table.

Could that work?

Matt my name is Samir and İ live in Azerbaijan. You cant belive this is what I was looking for many many month. I also added comments into my power query results and after sorting data was moved. I could not understand what is going on. Now I imagine why this occur. You are fantastic!!!! Thank you very very much!!

I am trying to use this approach to update a table with daily excel files with closing stock price info but something is wrong and at some point it doesn’t work. let’s assume i start with daily stock data file stored in a workbook and read from it and import the data in power query. i then clean my data and load it to a table. then i create a new query from this table and add a new column using Date.ToText(#date(2017, 8, 11)) where essentially i say to power query that the specific file it will read from and add to the db will be for the 11th of Aug, then another one for the 14th etc…

next step – per your post – I create a connection and then try to merge the original table with the new one and expand the date field only but i get “null” for dates. is this because during the merge i do not have unique ids? should i create an extra column with let’s say the stock ticker and the custom date e.g. MSFT11/8/2017 ??

thank you in advance for your help.

best regards,

Zaf

Zaf, my technique has unique IDs and also the new data is manually added in Excel, not Power Query. It sounds like you don’t have IDs and are trying to automatically add data. If you can automatically identify the columns in Power Query before loading to Excel, and then if you manually add data (data, not formulas) in Excel then I see no reason why it wont work. If that is not what you are doing, then you will simply have to test it and see what you can work out.

Thank you for the quite useful post! I am trying to use its logic and get stock market daily data from the web and from another source (sap bi). I am not sure though what would be the best way to flag the date as in some cases I might pull the data on a later date (when downloading from sap).

1. let’s assume i want to update a daily price table and due to vacation i delayed the update for a whole week. once i start downloading the daily data, i want my query to read each day and add it to the db

2. in the case of the web update, i link the original source to the website and need to re-visit this daily so that it adds the new data and creates some history. what is the optimal way to do this? is there a way to schedule the data import on a daily basis even if i am not in front of the pc?

thank you in advance for your support!

in point 1, you say “add it to the db”. Where is your db? Excel is not a db and you can’t use this technique to use it as a db (as far as I can tell anyway)

Hi Matt.

Wrote a post on Microsoft Communities. A lady replied.

Her solution was to simply delete the additional columns that appear, denoted with the ascending number sequence.

The query worked after that. Tried again from scratch and the numbers still show up again. At least deleting the columns fixes the issue.

Guessing it is a bug or addon issue.

Thanks again for a very useful post. Have implemented it at work on a multi-file and multi sheet query. It is working like a charm.

Cheers

The same problem. Something is wrong with Excel 2016

I have Excel 2016 and last time I tried it worked fine. If you can share more information about the problem I would be happy to look

Hi. Great and helpful post.

Have it working, with one minor issue (tried 15 times mirroring your data and following exact steps).

When completing the final step, after clicking load and close (after the merge), an additional Comments column shows up called “Comments2”. Everything works fine (sort and filter, open and close and the additional data remains within the relative record), but can’t get rid of (or hide) the Comments2 column.

Also tried the same above while adding in 15 additional columns. The same thing happens where the column name is replicated and a 2, 3, 4…n is added on at the end of the table.

Any suggestions? Thanks in advance.

Interesting. It seems it is identifying the 2 columns as being different. What version of Excel are you using? If you can share the workbook, I will be happy to take a look.

Hi Matt.

Excel version is 2016. Tried again this morning, same results.

Here is a link to the file, including some notes: https://drive.google.com/open?id=0B1SmfAQFXi1fTjdLUFZaYlg5cTg

Appreciate your assistance

Hi Ryan

I can’t actually work out what is wrong, because the issue already exists and the self referencing already picks up both copies of comments2. I have taken your data and recorded a video of my process – it works for me. So unless there is something strange about your version of Excel 2016, I can’t work out the issue. Can you try by copying my video.

https://www.dropbox.com/s/h5349huo5rkf3hc/Video_2017-01-09_075555.wmv?dl=0

Hi Matt,

Thanks for going through the extra work to make the video while testing the code. Much appreciated.

Our steps are exactly the same. Couple of notes:

In the video, at 1:54, you can see the table expand, then contract (image link below). It seems my version of Excel is not doing the contracting part (or removing the “Column2” column)

https://drive.google.com/file/d/0B1SmfAQFXi1fQzdkVDZwdkJSWHM/view?usp=sharing

Curious what your code looks like on the Comments query. Noticed after refreshing that the “Column2” column appeared.

Image screen shots show the changes. Odd the code doesn’t change.

Pre-refresh: https://drive.google.com/file/d/0B1SmfAQFXi1fQjFhMkliTVNGdEU/view?usp=sharing

Post-refresh: https://drive.google.com/open?id=0B1SmfAQFXi1fcHZ0cG5rQm16RDA

Pre-refresh Code: https://drive.google.com/open?id=0B1SmfAQFXi1fU21CYmprb2pGd1k

Post-refresh Code: https://drive.google.com/open?id=0B1SmfAQFXi1fak5vNG1rU2gxbWs

Excel: 2016 Version 1609 (Build 7369.2095)

Looks like a tech support question for Microsoft. If I learn anything, will update the post. Thanks again for sharing this very useful query tool!!

Cheers

My code looks just like yours. Very strange. I even added a second column like you did and it still worked my end.

let

Source = Excel.CurrentWorkbook(){[Name=”Data4″]}[Content],

#”Changed Type” = Table.TransformColumnTypes(Source,{{“ID”, Int64.Type}, {“Date”, type datetime}, {“Amount”, type number}}),

#”Merged Queries” = Table.NestedJoin(#”Changed Type”,{“ID”},Comments,{“ID”},”NewColumn”,JoinKind.LeftOuter),

#”Expanded NewColumn” = Table.ExpandTableColumn(#”Merged Queries”, “NewColumn”, {“Comment”}, {“Comment”})

in

#”Expanded NewColumn”

Let me know if you hear something from MS

As a follow up for this issue: https://answers.microsoft.com/en-us/msoffice/forum/all/excel-power-query-self-referencing-query-issue/7bd23d22-33ce-4726-8321-005e444d094a

I was having the same issue. What made the difference for me was adding the comment column to the end of the table like Matt did in the video above. I had been inserting a “Comments” column into the second column of my source table in excel. Once I did that the “Comments2” column stopped showing up. I also made sure I put in some sort of test comment.

Very nice blogpost!

In order to prevent messing by accidental multiple loads you can add a Remove-Duplicates step on the index – just to be on the safe side 🙂