Until recently, I had not spent a lot of time building Power BI Reporting solutions that have SharePoint Online as a data source (This article refers to SharePoint Online specifically, but I am sure the experience is the same with on premise SharePoint). Over the last month or so I have been working with a few customers that are using SharePoint for the storage of source files in document libraries. On the face of it, it seems like a great idea to leverage SharePoint as a storage location for CSV and Excel files.

- Everyone has easy access to the files for editing and storage

- SharePoint manages version control, check in, check out etc

- SharePoint can facilitate shared editing of files

- You can build a Power BI report that will refresh online without the need to install a gateway.

Unfortunately, despite the benefits, the experience is not great. If you use Power BI you may have noticed that Power BI performance with SharePoint as a data source is simply terrible. Ultimately, the problems come down to performance in 2 areas.

Issue 1: Refresh Speed

Firstly, there is the refresh performance. SharePoint has an OData API which is not very performant (same with most OData APIs, actually). When you try to refresh against SharePoint, you will find the refresh is going to be slow. Now that doesn’t mean it is not workable, because refresh happens in the background, after all. In my testing and practical experience, the performance of SharePoint refresh drops off rapidly as soon as you create a number of sub folders in a document library. Stated differently, if you have your files in the main folder of your document library the performance is not too bad, but as soon as you create a few sub folder branches, performance drops away rapidly. A Power BI workbook with a number of sources seems to take 4-5 times longer to refresh when compared to the same workbook pointing to a file server.

Issue 2: Query Editing/Maintenance Speed

This second issue is much bigger than the first issue. When you have many SharePoint connections in your PBIX file, the query editing experience is woeful. Power Query has a few tricks to improve the experience, such as keeping a cached copy of the data for preview purposes. Despite this, I have found that editing queries connected to SharePoint to be so slow that it is literally unworkable when compared working with local files on your PC.

Actually, there is one setting in Power Query that you can alter that can improve the situation a bit.

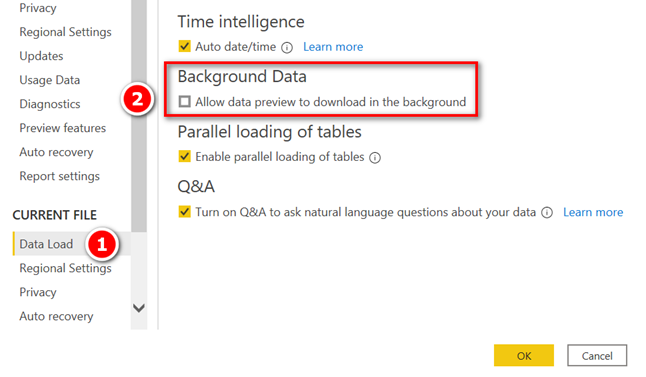

Go into File\Options and Settings\Options

Go to the current file data load (1 below) and turn off background data (2 below).

This is definitely worth doing, but in my experience, it didn’t solve the SharePoint performance problem. I lost so much time trying to build queries with connections to SharePoint that I simply had to find another way.

Sync Local Files Instead

The best alternative I have come up with is to sync the files from SharePoint to a file server using OneDrive.

- Use OneDrive to sync a copy of the files from SharePoint onto a file server that can be accessed via a Gateway. This can be the same server used for your gateway or a file server on the network.

- OneDrive keeps a local copy on the file server in sync with SharePoint.

- Load the files from the local PC rather than from SharePoint.

This is the best option but comes with some new problems.

- One of the benefits of storing your files on SharePoint Online is that you don’t need a gateway at all. If you take this approach, then you may need to arrange for a gateway to be installed.

- If you replicate the files to the gateway machine, you probably will need to have a different file location on your PC for development and then change the file path when you deploy.

- Thirdly, there is an issue (if not a bug) where you cannot connect Power Query to the root folder of a synchronised OneDrive Folder from SharePoint.

eg, if you sync SharePoint to you PC as

C:\Users\Matt\Excelerator BI Pty Ltd\Excelerator BI Pty Ltd Team Site – Documents

you can connect Power Query to

C:\Users\Matt\Excelerator BI Pty Ltd\Excelerator BI Pty Ltd Team Site – Documents\Sub Folder\

but you cannot connect to

C:\Users\Matt\Excelerator BI Pty Ltd\Excelerator BI Pty Ltd Team Site – Documents\

Just keep that in mind – I lost an hour or so trying to discover why it wouldn’t work.

Also note: If the files are to be stored on a file server, you need to use the full network path to the files, not the mapped drive version. E.g. something like \\fileserver\department\folder name instead of n:\department\folder name

There is another way

There is another way to solve the SharePoint problem. That is a bigger topic and I have covered that off in my next article. For now, I just wanted to assure you “it’s not just you”.

I am new to Power BI and we are considering upgrading our current licenses, however I have experienced some issue form the get go. As a start bot the data source and the pbix. files are saved on share point. I did some changes like add icons, formatting, and created new measures etc. opened and closed the files on numerous occasions without any issue, then this morning I opened the pbix file again and all my changes where gone. Like the the files reset to a previous version……..there is no history under versions. It is literally like I never did the work. Has anyone ells experienced something similar. Is this a BI or a sharepoint issue?

Hey Matt,

Great article and very informative! I have had a lot of positive experiences with Sharepoint Online lists and PowerBI reporting. Curious if anyone has had similar experience below with using this Sharepoint Online site collection list available from SP Admin Center?

Issue

Ran into a recent similar “data refresh” related issue with PowerBI connection to the Sharepoint Online Site Collection List (DO_NOT_DELETE_SPLIST_TENANTADMIN_AGGREGATED_SITECOLLECTIONS) that contains all of your sites by Hub.

Have a requirement to build more intuitive site search page using PowerBi and this SP list instead of using the out of box Sharepoint search at top of page. PowerBi report works great but even with data refreshes setup, I have been unsuccessful get to the PowerBi to update the dataset with latest sites being created. I have turned off all filters to be sure it was not querying specific hub, etc….

Thanks and any thoughts?

Sorry, I don’t know. Probably best to ask at https://community.powerbi.com/t5/Desktop/bd-p/power-bi-designer

I’ve been using SharePoint Lists as a data source in Excel since 2016. A few months ago, I decided to try out Power Query, Power Pivot and DAX to see if this would tackle some performance issues that I started to encounter in Excel. I also wanted to publish reports on Power BI. I took the opportunity to normalize all tables and to build a clean data model. After all this, I was ready to give Power Query a try and I immediately ran into unbearable refresh time issues to the point where Power Query became unusable with SharePoint Lists as the data source. I nearly gave up and I don’t understand how such a situation is even possible: please Microsoft, you need to address this!

But I would like to share my current workaround:

I don’t use a Power Query connection to SharePoint anymore (Data > Get Data > From Online Services > From SharePoint Online List)

1) I’m using the good old “Export to Excel” method that generates a Microsoft Excel Web Query File (.iqy) method. I store these connections in a library with Microsoft Office Data Connection (.odc) files so that I can easily reuse them.

2) I then use the table created by ODC/IQY as the data source for Power Query (Data > From Table/Range). This is much faster with nearly instant refresh times and I can use Power Query and its features without having to listen to my beard growing 🙂

Any comments on this?

François

I will email you

Hi Matt,

Reading this specific blog brought tears to my eyes. I was heading myself that why is Sharepoint so slow in PowerBI. Now I can Rest in peace thinking that I am not alone in this.

Now comes to the question which I might need help.

We have pdf files uploaded in the Sharepoint folder for which there is a mandatory metadata field/column which User needs to fill while uploading the files.

Can you tell me how can I load that particular metadata field/column which I’m not sure where to look when I connect to Sharepoint in query editor

I don’t have an immediate answer, but I assume when you first connect to the doc library, there may be some metadata columns. This may be a good future blog article (when I get some time)

Hi Matt,

I have been having my own nightmare using power bi with sharepoint. I have a few queries and I have found it unworkable. The time it takes to apply seems to double each time I add another query. I am sitting here waiting on the last one to be applied, it has been running for 3 hours and still hasn’t finished. I have lost days getting various different errors and/or not responding. Help!!!

Let me know if my solution helps you https://exceleratorbi.com.au/fast-track-power-bi-dev-with-sharepoint-files/

Thanks for sharing Matt. Can’t wait to hear the solution.

Just further to my last comment I imported an excel file from SharePoint using

Source = Excel.Workbook(Web.Contents(“https://mycompany-my.sharepoint.com/:x:/g/personal/joshuabrown_mycompany/xxxxxxxxxxxxxxxxxxxx_xxxxxxxxx?e=xxxxxx&download=1”), null, true)

This uses a direct link (you need the &download=1 at the end to force the download). I don’t know if this is better or faster, but it might be worth investigation.

Interesting, I have not used SharePoint much but for simple dimension files it works fine for me. I have been thinking of moving away from file servers though for dimension files maintained by business teams as it facilitates working away from the office without having to go through clunky VPN’s.

I have used Python to download files from SharePoint to a network drive. You can do this using the requests package but you need to create a direct link url for each file. I haven’t tried this in Power Query so not sure if using direct url link is possible.

I personally have problems with PowerBI and Sharepoint too. But although the responsiveness is bad as is, my bigger issue is accuracy.

I have a 2GB Sharepoint CSV file that I was connecting to. It needed 20mins to refresh my report, but I was willing to wait. But then, one of my report users noticed that they were missing data from the overall counts. Strangely, the issue went away when I connected to the same 2GB local file.

Not sure if anyone also experienced this, but really large Sharepoint datasets seem to be missing rows upon refresh.

I’ve never heard of that, but it sounds really bad. If you can reproduce this, I suggest you log a bug with Microsoft.

I have been switching from using Sharepoint.Files to Sharepoint.Contents when our Sharepoint libraries start growing bigger. It helps the performance a little bit but still pretty slow and painful overall.

This is what I do: I create a parameter source that it is a “switch”. So, in development mode, I toggle the switch to read local files. Before I publish, I change the parameter to point to the SharePoint version of the source.

Matt

I experienced the same problems. Instead of “SharePoint.Files()” I connect directly to the individual files. I wonder if that’s what you are going to show in the next blog or if you have another (even better) trick… Looking forward to your solution!

Dominik.

I’m bookmarking this, because this is such a horrible scenario that I’m just going to point people to the article rather than every try to do it next time. Thank you!!!

Hallelujah, praise Matt Allington. My god I’ve torn my hair out so many times over how slow it is to build a query on a SharePoint-based source. Can’t wait to see the solutions you’ve come up with next week. I have no idea why, but hearing “it’s not just me” is some small comfort. Misery loves company I suppose.

I’ve built a 2-file query where part 1 is an Excel file that pulls data in a rawest form out of SharePoint (which is still almost unworkably slow to build) and then a second PowerQuery in a completely separate file that uses the first Excel file as the source. The second file is where I do all the actual data cleanup and transformation needed.

Hi Matt,

Yes we ran into this problem. Everything just stopped working. I eventually did as you mentioned synced the SharePoint files to my local machine. I also moved my OneDrive Folder to a folder named OD that is on the C drive. So the Path is

Local Disk (C:) > OD. This way all our machines have the same path even if it is a different user. I also put my Sharepoint folder in the same OD folder and haven’t had any problems with the Sub Folders.

Thanks for the post, now I know it wasn’t just my problem.

Totally agree.

I’ve told our SharePoint administrator – SharePoint is a curse in my vocabulary. Unfortunately our organization is embracing it more and more and will become problematic soon.

With our set up, we have to sometimes ‘merge’ the data with some on-prem data as well, which for some reason the PowerBI Service wants to push it all through the Gateway, and on which I use a service Account to drive the refreshes in our service – but SharePoint online does not like our ServiceAccount, and therefore just cannot connect to it. If I use my own account, it would work because I’ve got access to the SharePoint site – but that’s not the solution.

Currently it’s a stay away zone for us.

The only workaround we have is to write a LogicApp in Azure to connect to it – and bring the data back on-prem where we can work with it and the push it back to the Cloud. What a waste of time and energy. These systems should be able to talk to each other much easier.

Completely agree, I spent some time really struggling using SharePoint as a container for my data source files so very pleased to hear it’s not just me. I then used data flows as the method to extract from SharePoint to then feed Power BI, been trouble free ever since.

Hi Sue. What is your experience when editing the dataflows? I assume that is slow too (eg if you have to do a file combine with many applied steps. I’m not so unhappy about the sync time, but the editing preview refresh/responsiveness

Hi Matt

I have been working with organisations who use Sharepoint as a key repository for their financial management data. The best way I have found is to map the Sharepoint folder to a spare drive. I have a simple macro that recreates the drive if I power off. I haven’t been working with large data sets so maybe haven’t felt the pain you are referring to yet! Looking forward to your next blog!

Yes, syncing to a file server is a good solution as long as you have a gateway

Thank you for sharing Matt. This is very valuable.

I have the same experience, and I think a lot have to do with all the metadata Power Query has to run through in the Sharepoint folder, if there are several files stored in that folder. So, my solution was to import the files to dataflows, and then connect to that instead. Looking forward to reading you next article, and how you solved it.

Yes, lots of feedback here about dataflows – that is a good option.

Matt

agree on the issues with Sharepoint ,mainly in getting them setup and issues with authority conflicts, slowing things down.

Have found that using data flows to source the data and keeping the non gateway sources in separate data-flows from the sources that go through the gateway has improved things, but you then have the downside of another refresh step in the process. but it is certainly more reliable.

I felt the same pain and finally have moved all of the heavy work into PBI dataflows (stitching a significant number of Excel files together which are all stored on a central SharePoint). Refresh can happen at a suitable time and I only link my data models to that dataflows in the end. Despite the extra step, this makes the whole experience a lot smoother.

Hi Matt,

I think you missed the biggest drawback. I access data from several sharepoint online sites using Excel and use several different sets of credentials. When I setup the request I have to enter my credentials and they are then stored (maybe in Credential Manager). All works fine and dandy.

However when I come back to my project sometime later, a refresh of the data may or may not work. When it doesn’t, the only way I’ve found to get access back to my data is to reedit EVERY query and reenter my credentials for eacn and every source. It’s a pain and a real time waster. I use reenter the same username/password combos (which should be stored for each of the sources), but for some reason I’m forced to frequently reenter them.

I don’t even know where to look for a solution to my problem.

thanks

Andy

I have shouted this from the hills and recommend using file shares for large files and SP only for small dimension/lookup tables. It doesn’t surprise me at all that SharePoint + Power BI is better because SharePoint is objectively terrible.

I’m Optimistic that this item includes new and improved SharePoint connectors: https://docs.microsoft.com/en-us/power-platform-release-plan/2020wave2/power-bi/analyze-power-bi-sharepoint-lists as the product team definitely knows this is a customer pain point

Thank you for sharing and providing such useful insights on sharepoint and Power BI and Power Query. Companies are planning or implemented Sharepoint as the sole source of truth for reporting.

Hi Matt. Thanks for this. I have been building some PowerBI using Sharepoint lists as a source – and have given up 2 months of my life to the woeful experience. The maximum number of rows in one table is about 2000. So not huge and the whole model is only 584kb. So tiny in comparison to other models I have. I did see that trick about turning off the background refresh, but it seems to have come back to bite me and I have had to turn it back on. I have been through pressing a query step and waiting for a response. At one point close and apply was taking up to 25 mins to action. It is really disappointing that two Microsoft products cannot interact well with each other. I was beginning to think it was me, as there is a noticeable absence of Microsoft content on this subject. But your article reinforces my pain. Thank you…. can’t wait till your next blog.

As you can see by the comments on this post, there are a lot of people feeling this pain. As I said, I have only recently understood the problem since I haven’t used SharePoint as a source much, and I also have seen much noise about it elsewhere. At least we all know now that we are not alone.

I have a solution for files – I think it can be applied to Lists too, but I will have to look into it.

Thanks Matt, I look forward to the next one. We have been leaning towards using more lists as dimension tables that lend themselves to being maintained by the average business punter. This article gives me some pause now.