In this article I am going to share with you a concept to manage refresh failures in production PowerBI.com reports. In a perfect world, you should configure your queries so that they “prevent” possible refresh failure issues from occurring, but also to notify you when something goes wrong without the report refresh failing in the first place. There are many things that can go wrong with report refreshes and you probably can’t prevent all of them occurring. In the example I use in this article I will show you how to prevent a refresh failure caused by duplicates appearing in a lookup table after the report has been built, the model has been loaded to PowerBI.com and the scheduled refresh has been set up using a gateway. If during refresh a duplicate key occurs in any of the Lookup tables in the data model, the refresh fails, and the updated data does not go live.

To solve this particular problem, I need to:

- Pre-emptively remove potential duplicates to enable the refresh to complete without failure.

- Keep track of any duplicate keys that may appear in the Lookup tables after the report is put into production.

I will explain how to manage such a situation with the Adventure Works database as the example. The end state is to have a set of queries that:

- Stages the lookup tables (don’t load this staging query)

- Branch a lookup table to load (Products in this example). Make sure duplicates are removed before loading

- Branch an Audit table keeping only the duplicates so I know a problem has occurred.

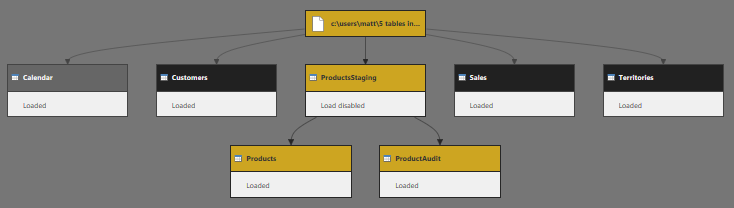

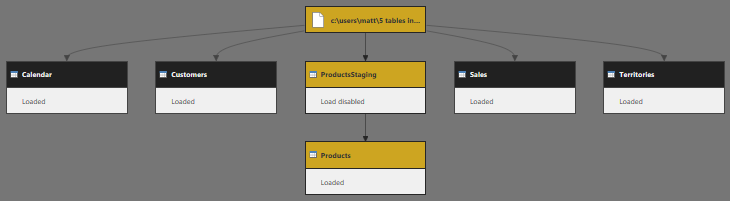

See final query workflow below.

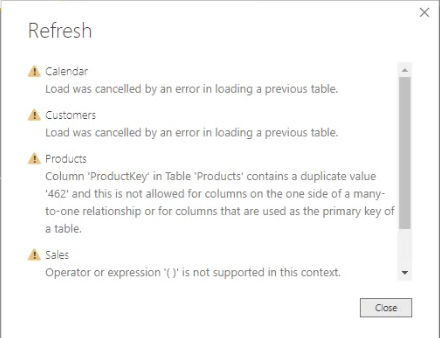

The Problem

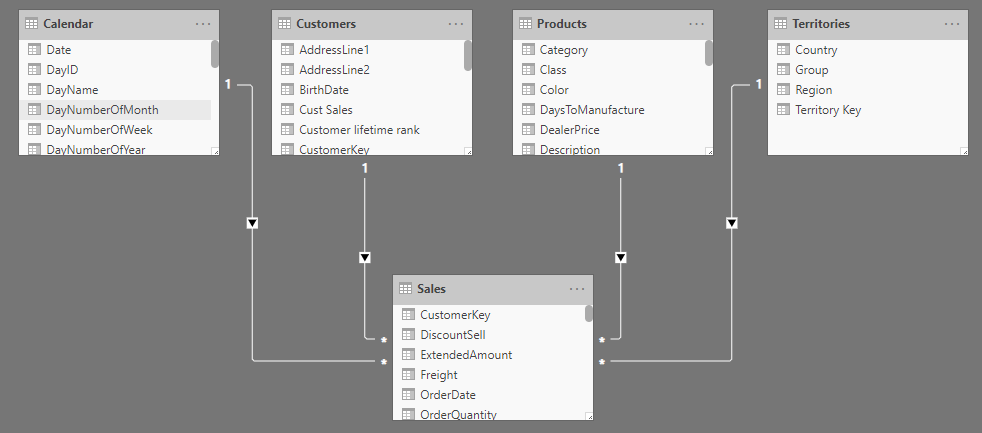

In the Adventure Works database, we have 4 Lookup tables and 1 Data table. Each of the Lookup tables have a one-to-many relationship with the Data table as shown below.

Let’s consider the Products table for example (the same holds for all the Lookup tables in the data model). Initially with this data model there are no duplicates on the Products[ProductKey] – otherwise you would not be able to create the relationship. But a problem can arise while the report is in production with scheduled refreshes. If a refresh creates a duplicate in the Products table, i.e. the ProductKey appears twice, the refresh fails preventing the new data going live.

The Solution

The solution as suggested earlier is to branch the lookup table query, then

- Pre-emptively remove the duplicates to enable the refresh without failure.

- Keep track of any duplicate keys that may appear in the Lookup tables after the report is put into production.

Editing the Products Query

To create the staging table:

- Launch Power Query

- Select the Products table.

- Go to the last step of the Products query, right-click and then select Extract Previous. This will move all prior steps in the current Products table into a new query.

- Call it ProductsStaging.

Now the new query ProductsStaging contains all the steps that were part of Products query and the Products query refers to ProductsStaging at the first step. You should set the staging query so it doesn’t load (turn off enable load).

In the Query Dependencies view, you can find that ProductsStaging table now feeds the Products table.

The trick now is to remove any potential duplicates from the Products table pre-emptively so that there will not be any refresh failures.

- Select the Products table.

- Right-click on ProductKey column and select Remove Duplicates.

It doesn’t matter if there are duplicates or not at this point in time. The Products table now has the Remove Duplicates step included as the last task, and that will prevent any duplicates flowing through during a refresh. This removal of duplicates is pretty brutal – it just keeps the first one and removes the second. Maybe it keeps the correct one, maybe not, but importantly the report will refresh. So at this point I would like to know if any duplicates occurred but were successfully removed by the pre-emptive step so I can go and fix the problem. For this I create a second table (branching from the ProductsStaging table) that captures the information on any occurrences of duplicates.

- Right-click on ProductsStaging table and select Reference.

- This creates a new query, call it ProductAudit.

I use the ProductAudit table to capture any occurrences of duplicates that occur after a refresh.

- In the ProductAudit table, click on ProductKey column.

- In the menu click on Home -> Group By.

- The grouping is by ProductKey and select Count column by Count Rows.

- This creates a 2 column table containing all the product keys and a new column indicating the number of occurrences for each key. Initially the column will consist only of 1’s as initially there are no duplicates.

- Next filter the Count column to keep only those rows with value > 1. i.e. you will then be retaining only those product keys that have duplicates. The table is empty at this time as there are no duplicates.

Close&Apply the changes.

Steps to Build an Alert

In the ProductsAudit table, create a new measure

Product Duplicates = COUNTROWS(ProductsAudit) + 0

The +0 is just cosmetic. It forces a 0 result instead of a blank.

- Create an audit page on the report.

- Place a Card visual on the report page and add the measure [Product Duplicates] to the Card.

The Card displays 0 as there are no duplicates at this time. If a refresh creates duplicates, the card will show a number >=1.

With this all set up, publish the report to Power BI and ensure that the Gateway and scheduled refresh are configured.

Managing Alerts

Alerts are configured in a Dashboard in PowerBI.com. So after publishing the new report.

- Pin the Card visual to an Audit Dashboard.

- Right-click on the Card and select Manage Alerts.

- In the Manage Alerts Panel, click on Add alert rule.

- Set the condition as Threshold to be 0. i.e. if Product Duplicates > 0, an alert email will get sent to me.

Having set this alert, I simulated an issue by changing my data source and adding a duplicate ProductKey in the Products table.

Bingo! I got the following email.

So the production report will be refreshed as planned and in addition I get an alert so that I know there was a problem and I can set about fixing it.

Wrap Up

I have showed the technique on a single lookup table. In reality, you would build this on any and all lookup tables likely to be an issue. You can also apply this technique to any other trackable data defect that may occur in your environment. If you have lots of different use cases you could modify the technique to append all the Audit tables into a single final table listing one row for each error, and some sort of error message to yourself.

Comments?

What do you think? What other production refresh issues do you need to trap and prevent? Any other comments?

Want to Learn more Power Query Tips and Tricks?

You can learn by enrolling to my Power Query Online Training. It is a self-paced course that has 7.5 hours of video content, worked out examples, and course material for you to practice your new skills.

Thank you for sharing this proactive approach. I’m facing the same issue and the reports failed with refresh due to duplicates in look up table. I’ll apply this on my datasets.

However I have a question with understanding the meaning of staging table. Why we can’t Remove Duplicates directly in the original look up table while using a staging table?

You can skip the staging table and it will work, but then you have no way of identifying you have a problem. The staging table “keeps” the problem and stores it, then passes the data to be “fixed” and loaded. Then you can take a branch from the staging table, filter for duplicates, and trigger a report that tells you there are now some duplicates. Without the staging table, you would have know way of knowing there’s an underlying problem.

hello good explanation. but i have problem when using this. I often get duplicate IDs on certain tables. i use a dataset. and I have worskspace and everything is scheduled refresh. Is it because there are many refresh times at the same time causing someone to fail to delete the data that is there. For real data I use DataView in my database. have you also experienced something like me ..?

thank you..

Sorry, I don’t understand. You say it doesn’t work – what is happening?

This is a great tip. Thank you for explaining each step to set alerts for these small issues that can become big problems.